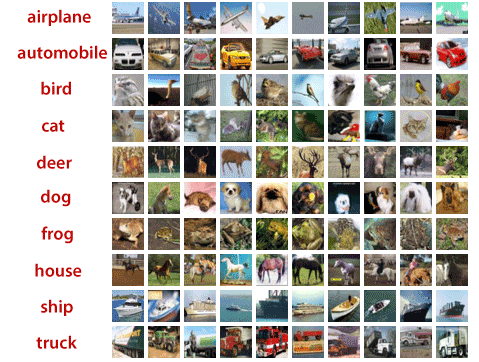

CIFAR-10 and CIFAR-100 Dataset in TensorFlow

CIFAR-10 and CIFAR-100 (CIFAR stands for Canadian Institute for Advanced Research) are labeled subsets of the 80 Million Tiny Images dataset. They were collected by Alex Krizhevsky, Vinod Nair, and Geoffrey Hinton. The CIFAR-10 dataset is divided into five training batches and one test batch, each with 10,000 images.

The test batch contains exactly 1,000 randomly selected images from each class. The training batches contain the remaining images in random order, but some training batches may contain more images from one class than from another. Between them, the training batches contain exactly 5,000 images from each class.
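The split can be checked with a little arithmetic and a per-class counter. The following is a minimal sketch on synthetic labels, not the real data; the helper name `count_per_class` is hypothetical, and the class count of 10 is taken from the name CIFAR-10.

```python
from collections import Counter

NUM_CLASSES = 10          # CIFAR-10 has ten classes
TRAIN_BATCHES = 5         # five training batches
IMAGES_PER_BATCH = 10_000 # every batch holds 10,000 images


def count_per_class(batches):
    """Count how often each label occurs across a list of label batches."""
    totals = Counter()
    for labels in batches:
        totals.update(labels)
    return totals


total_train = TRAIN_BATCHES * IMAGES_PER_BATCH    # 50,000 training images
per_class_train = total_train // NUM_CLASSES      # 5,000 per class
per_class_test = IMAGES_PER_BATCH // NUM_CLASSES  # 1,000 per class

# Synthetic example: each toy batch is unbalanced on its own,
# but the classes are balanced once the batches are combined.
toy_batches = [[0, 0, 1], [1, 2, 2]]
toy_totals = count_per_class(toy_batches)
print(total_train, per_class_train, per_class_test, dict(toy_totals))
```

Running the same counter over the label lists of the real training batches should give 5,000 for every class, even though a single batch may be uneven.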

The classes are completely mutually exclusive. There is no overlap between automobiles and trucks: the automobile class includes sedans, SUVs, and similar vehicles, while the truck class includes only big trucks. Neither class includes pickup trucks. Looking through the CIFAR dataset, we see that there is not just one type of bird or cat. The bird and cat classes contain many different kinds of birds and cats, varying in size, color, magnification, angle, and pose.

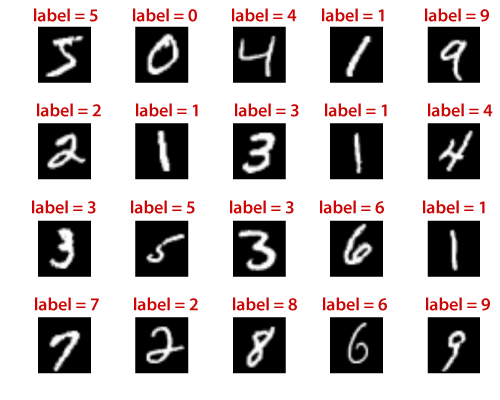

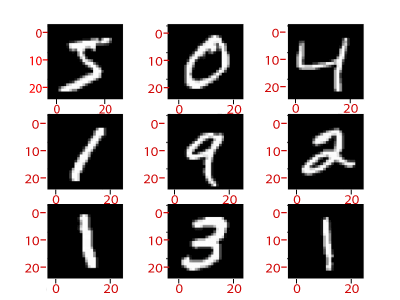

With the MNIST dataset, there are many ways to write the digit one or the digit two, but the images are not nearly as diverse, and on top of that, MNIST is grayscale. The CIFAR dataset consists of 32 by 32 color images, each with three color channels. The important question now is whether the LeNet model, which performed so well on MNIST, will be enough to classify the CIFAR dataset.
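To put the image sizes in numbers, the sketch below counts the values stored per image. It compares a 32 by 32 image with three channels against a 28 by 28 single-channel image, the usual size of the MNIST handwritten digits. The MNIST size is an assumption, since the article does not state it, and `values_per_image` is a hypothetical helper.

```python
def values_per_image(height, width, channels):
    """Number of pixel values a model receives for one image."""
    return height * width * channels


cifar_values = values_per_image(32, 32, 3)  # color image, three channels
mnist_values = values_per_image(28, 28, 1)  # assumed MNIST size, grayscale
ratio = cifar_values / mnist_values

print(cifar_values, mnist_values, round(ratio, 2))
```

Under that assumption a CIFAR image carries nearly four times as many input values as an MNIST digit, on top of being far more varied in content.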

CIFAR-100 Dataset

It is just like the CIFAR-10 dataset, except that it has 100 classes containing 600 images each. There are 500 training images and 100 testing images per class. The 100 classes are grouped into 20 superclasses, and each image comes with a “fine” label (the class to which it belongs) and a “coarse” label (the superclass to which it belongs).

The classes in the CIFAR-100 dataset are listed below:

| S. No | Superclass | Classes |
|---|---|---|
| 1. | Flowers | Orchids, poppies, roses, sunflowers, tulips |
| 2. | Fish | Aquarium fish, flatfish, ray, shark, trout |
| 3. | Aquatic mammals | Beaver, dolphin, otter, seal, whale |
| 4. | Food containers | Bottles, bowls, cans, cups, plates |
| 5. | Household electrical devices | Clock, lamp, telephone, television, computer keyboard |
| 6. | Fruit and vegetables | Apples, mushrooms, oranges, pears, sweet peppers |
| 7. | Household furniture | Table, chair, couch, wardrobe, bed |
| 8. | Insects | Bee, beetle, butterfly, caterpillar, cockroach |
| 9. | Large natural outdoor scenes | Cloud, forest, mountain, plain, sea |
| 10. | Large human-made outdoor things | Bridge, castle, house, road, skyscraper |
| 11. | Large carnivores | Bear, leopard, lion, tiger, wolf |
| 12. | Medium-sized mammals | Fox, porcupine, possum, raccoon, skunk |
| 13. | Large omnivores and herbivores | Camel, cattle, chimpanzee, elephant, kangaroo |
| 14. | Non-insect invertebrates | Crab, lobster, snail, spider, worm |
| 15. | Reptiles | Crocodile, dinosaur, lizard, snake, turtle |
| 16. | Trees | Maple, oak, palm, pine, willow |
| 17. | People | Girl, man, woman, baby, boy |
| 18. | Small mammals | Hamster, rabbit, mouse, shrew, squirrel |
| 19. | Vehicles 1 | Bicycle, bus, motorcycle, pickup truck, train |
| 20. | Vehicles 2 | Lawn-mower, rocket, streetcar, tractor, tank |
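The relation between fine and coarse labels can be pictured as a lookup from class to superclass. The sketch below uses only three rows of the table and plain strings for readability; the names `SUPERCLASSES`, `FINE_TO_COARSE`, and `coarse_label` are hypothetical, and the strings stand in for whatever label encoding the dataset files actually use.

```python
# Three superclasses copied from the table; the remaining 17 follow the same pattern.
SUPERCLASSES = {
    "flowers": ["orchids", "poppies", "roses", "sunflowers", "tulips"],
    "fish": ["aquarium fish", "flatfish", "ray", "shark", "trout"],
    "vehicles 1": ["bicycle", "bus", "motorcycle", "pickup truck", "train"],
}

# Invert the table: every fine label points to exactly one coarse label.
FINE_TO_COARSE = {
    fine: coarse
    for coarse, fines in SUPERCLASSES.items()
    for fine in fines
}


def coarse_label(fine):
    """Return the superclass of a fine label; raises KeyError if unknown."""
    return FINE_TO_COARSE[fine]


print(coarse_label("shark"))
print(coarse_label("pickup truck"))
```

Because every superclass holds five classes, the full mapping would contain 100 entries spread over 20 superclasses.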

Use-Case: Implementation of CIFAR10 with the help of Convolutional Neural Networks Using TensorFlow

Now we train a network to classify images from the CIFAR-10 dataset using a Convolutional Neural Network built in TensorFlow.

Consider the following flowchart to understand how the use-case works:

[Image: flowchart of the use-case]

Install Necessary packages:
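The package list for this step is not reproduced here. As a rough sketch, the check below reports which packages are missing from the current environment. The list `REQUIRED` (`tensorflow` and `numpy`) is an assumption, not the article's own list, and `missing_packages` is a hypothetical helper.

```python
import importlib.util

# Assumed package list; adjust it to whatever the training script imports.
REQUIRED = ["tensorflow", "numpy"]


def missing_packages(names):
    """Return the top-level packages from `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(REQUIRED)
if missing:
    print("Missing packages, install with: pip install " + " ".join(missing))
else:
    print("All required packages are installed.")
```

The check only looks the packages up and does not import them, so it runs quickly even when TensorFlow is installed.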

Train The Network:
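The article's own training script is not reproduced here. As a stand-in, the following is a minimal Keras sketch of a small CNN for 32 by 32 color images and 10 classes. The layer sizes, the Adam optimizer, and the function names `build_model` and `train` are assumptions. Only the batch size of 128 and the 60 epochs come from the article, and the log shown under Output was produced by the original script, not by this sketch.

```python
INPUT_SHAPE = (32, 32, 3)  # 32 by 32 pixels, three color channels
NUM_CLASSES = 10           # CIFAR-10
BATCH_SIZE = 128           # batch size from the training-time table
EPOCHS = 60                # epoch count from the training log


def build_model():
    """Build a small CNN; the architecture is an illustrative assumption."""
    import tensorflow as tf  # imported lazily so the constants load without it

    return tf.keras.Sequential([
        tf.keras.layers.Input(shape=INPUT_SHAPE),
        tf.keras.layers.Conv2D(32, 3, padding="same", activation="relu"),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Conv2D(64, 3, padding="same", activation="relu"),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dense(NUM_CLASSES),  # one logit per class
    ])


def train():
    """Download CIFAR-10, train the model, and return it."""
    import tensorflow as tf

    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.cifar10.load_data()
    # Scale pixel values from [0, 255] to [0, 1].
    x_train = x_train.astype("float32") / 255.0
    x_test = x_test.astype("float32") / 255.0

    model = build_model()
    model.compile(
        optimizer="adam",
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
        metrics=["accuracy"],
    )
    model.fit(x_train, y_train, batch_size=BATCH_SIZE, epochs=EPOCHS,
              validation_data=(x_test, y_test))
    return model
```

Calling `train()` downloads the dataset on first use and starts training. Accuracy and speed will differ from the figures reported in this article.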

Output:

    Epoch: 60/60
    Global step: 23070 - [>-----------------------------] 0% - acc: 0.9531 - loss: 1.5081 - 7045.4 sample/sec
    Global step: 23080 - [>-----------------------------] 3% - acc: 0.9453 - loss: 1.5159 - 7147.6 sample/sec
    Global step: 23090 - [=>----------------------------] 5% - acc: 0.9844 - loss: 1.4764 - 7154.6 sample/sec
    Global step: 23100 - [==>---------------------------] 8% - acc: 0.9297 - loss: 1.5307 - 7104.4 sample/sec
    Global step: 23110 - [==>---------------------------] 10% - acc: 0.9141 - loss: 1.5462 - 7091.4 sample/sec
    Global step: 23120 - [===>--------------------------] 13% - acc: 0.9297 - loss: 1.5314 - 7162.9 sample/sec
    Global step: 23130 - [====>-------------------------] 15% - acc: 0.9297 - loss: 1.5307 - 7174.8 sample/sec
    Global step: 23140 - [=====>------------------------] 18% - acc: 0.9375 - loss: 1.5231 - 7140.0 sample/sec
    Global step: 23150 - [=====>------------------------] 20% - acc: 0.9297 - loss: 1.5301 - 7152.8 sample/sec
    Global step: 23160 - [======>-----------------------] 23% - acc: 0.9531 - loss: 1.5080 - 7112.3 sample/sec
    Global step: 23170 - [=======>----------------------] 26% - acc: 0.9609 - loss: 1.5000 - 7154.0 sample/sec
    Global step: 23180 - [========>---------------------] 28% - acc: 0.9531 - loss: 1.5074 - 6862.2 sample/sec
    Global step: 23190 - [========>---------------------] 31% - acc: 0.9609 - loss: 1.4993 - 7134.5 sample/sec
    Global step: 23200 - [=========>--------------------] 33% - acc: 0.9609 - loss: 1.4995 - 7166.0 sample/sec
    Global step: 23210 - [==========>-------------------] 36% - acc: 0.9375 - loss: 1.5231 - 7116.7 sample/sec
    Global step: 23220 - [===========>------------------] 38% - acc: 0.9453 - loss: 1.5153 - 7134.1 sample/sec
    Global step: 23230 - [===========>------------------] 41% - acc: 0.9375 - loss: 1.5233 - 7074.5 sample/sec
    Global step: 23240 - [============>-----------------] 43% - acc: 0.9219 - loss: 1.5387 - 7176.9 sample/sec
    Global step: 23250 - [=============>----------------] 46% - acc: 0.8828 - loss: 1.5769 - 7144.1 sample/sec
    Global step: 23260 - [==============>---------------] 49% - acc: 0.9219 - loss: 1.5383 - 7059.7 sample/sec
    Global step: 23270 - [==============>---------------] 51% - acc: 0.8984 - loss: 1.5618 - 6638.6 sample/sec
    Global step: 23280 - [===============>--------------] 54% - acc: 0.9453 - loss: 1.5151 - 7035.7 sample/sec
    Global step: 23290 - [================>-------------] 56% - acc: 0.9609 - loss: 1.4996 - 7129.0 sample/sec
    Global step: 23300 - [=================>------------] 59% - acc: 0.9609 - loss: 1.4997 - 7075.4 sample/sec
    Global step: 23310 - [=================>------------] 61% - acc: 0.8750 - loss: 1.5842 - 7117.8 sample/sec
    Global step: 23320 - [==================>-----------] 64% - acc: 0.9141 - loss: 1.5463 - 7157.2 sample/sec
    Global step: 23330 - [===================>----------] 66% - acc: 0.9062 - loss: 1.5549 - 7169.3 sample/sec
    Global step: 23340 - [====================>---------] 69% - acc: 0.9219 - loss: 1.5389 - 7164.4 sample/sec
    Global step: 23350 - [====================>---------] 72% - acc: 0.9609 - loss: 1.5002 - 7135.4 sample/sec
    Global step: 23360 - [=====================>--------] 74% - acc: 0.9766 - loss: 1.4842 - 7124.2 sample/sec
    Global step: 23370 - [======================>-------] 77% - acc: 0.9375 - loss: 1.5231 - 7168.5 sample/sec
    Global step: 23380 - [======================>-------] 79% - acc: 0.8906 - loss: 1.5695 - 7175.2 sample/sec
    Global step: 23390 - [=======================>------] 82% - acc: 0.9375 - loss: 1.5225 - 7132.1 sample/sec
    Global step: 23400 - [========================>-----] 84% - acc: 0.9844 - loss: 1.4768 - 7100.1 sample/sec
    Global step: 23410 - [=========================>----] 87% - acc: 0.9766 - loss: 1.4840 - 7172.0 sample/sec
    Global step: 23420 - [==========================>---] 90% - acc: 0.9062 - loss: 1.5542 - 7122.1 sample/sec
    Global step: 23430 - [==========================>---] 92% - acc: 0.9297 - loss: 1.5313 - 7145.3 sample/sec
    Global step: 23440 - [===========================>--] 95% - acc: 0.9297 - loss: 1.5301 - 7133.3 sample/sec
    Global step: 23450 - [============================>-] 97% - acc: 0.9375 - loss: 1.5231 - 7135.7 sample/sec
    Global step: 23460 - [=============================>] 100% - acc: 0.9250 - loss: 1.5362 - 10297.5 sample/sec
    Epoch 60 - accuracy: 78.81% (7881/10000)
    This epoch receive better accuracy: 78.81 > 78.78. Saving session...
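The step counter in this log can be cross-checked against the dataset size. The sketch below assumes a batch size of 128 (the value given in the training-time table) and 50,000 training images (5,000 for each of 10 classes). The variable names are illustrative.

```python
import math

TRAIN_IMAGES = 5000 * 10  # 5,000 training images for each of 10 classes
BATCH_SIZE = 128          # assumed from the training-time table
EPOCHS = 60               # from the "Epoch: 60/60" line of the log

steps_per_epoch = math.ceil(TRAIN_IMAGES / BATCH_SIZE)
final_global_step = steps_per_epoch * EPOCHS
# The last batch of an epoch holds whatever is left after the full batches.
last_batch_size = TRAIN_IMAGES - (steps_per_epoch - 1) * BATCH_SIZE

print(steps_per_epoch, final_global_step, last_batch_size)
```

Under these assumptions an epoch has 391 steps and 60 epochs end at global step 23460, which matches the last step in the log. The final batch holds only 80 images, which is consistent with the last accuracy value of 0.9250 (74 of 80 correct), while the other values are multiples of 1/128.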

Run Network on Test Dataset:

Sample Output

    Trying to restore last checkpoint ...
    Restored checkpoint from: ./tensorboard/cifar-10-v1.0.0/-23460
    Accuracy on Test-Set: 78.81% (7881 / 10000)
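The accuracy line is simply the share of test images whose predicted class matches the true class. A minimal sketch of that calculation follows. The function name `accuracy_report` is hypothetical, and the label lists are synthetic rather than real model output.

```python
def accuracy_report(predicted, actual):
    """Format test accuracy in the same style as the output line."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    total = len(actual)
    return f"Accuracy on Test-Set: {100 * correct / total:.2f}% ({correct} / {total})"


# Synthetic example: three of four predictions match the true labels.
report = accuracy_report([3, 8, 8, 0], [3, 8, 1, 0])
print(report)
```

With 7,881 matching labels out of 10,000, the same function yields the 78.81% figure reported here.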

Training Time

The table below shows how long 60 epochs of training take on each device:

| Device | Batch size | Time | Accuracy [%] |
|---|---|---|---|
| NVidia | 128 | 8m4s | 79.12 |
| Intel i7 7700HQ | 128 | 3h30m | 78.91 |
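To compare the two rows directly, the durations can be converted to seconds. The sketch below parses the time strings exactly as written in the table; `to_seconds` is a hypothetical helper.

```python
import re

SECONDS_PER_UNIT = {"h": 3600, "m": 60, "s": 1}


def to_seconds(duration):
    """Convert a string such as '8m4s' or '3h30m' to seconds."""
    parts = re.findall(r"(\d+)([hms])", duration)
    return sum(int(value) * SECONDS_PER_UNIT[unit] for value, unit in parts)


gpu_seconds = to_seconds("8m4s")     # NVidia row
cpu_seconds = to_seconds("3h30m")    # Intel row
speedup = cpu_seconds / gpu_seconds  # how many times faster the GPU run was

print(gpu_seconds, cpu_seconds, round(speedup, 1))
```

By these figures the GPU run is roughly 26 times faster than the CPU run for the same 60 epochs, with nearly identical accuracy.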