
Cache Memory

Data from main memory that the CPU uses frequently is stored in the cache memory, so the processor can access it in less time. Whenever the CPU needs to access memory, it checks the cache first. If the data is not found in the cache, the CPU accesses main memory.
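The check-the-cache-first rule can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that main memory is a list indexed by word address and the cache is a dictionary with no capacity limit and no replacement policy. The names `main_memory`, `cache`, and `read` are hypothetical.

```python
# Minimal sketch: a small dict (the cache) sits in front of a larger list (main memory).
# Assumptions (hypothetical): addresses index words directly; the cache has no size
# limit, so no replacement policy is modeled.

main_memory = [n * 10 for n in range(64)]  # hypothetical contents: address a holds a*10
cache = {}  # address -> word, for words already copied out of main memory


def read(address: int) -> tuple[int, str]:
    """Return (word, source), checking the cache before main memory."""
    if address in cache:
        return cache[address], "cache"  # found in the cache: fast path
    word = main_memory[address]  # not in the cache: go to main memory
    cache[address] = word        # keep a copy so the next access is fast
    return word, "main memory"


print(read(5))  # first access is served from main memory
print(read(5))  # the repeated access is served from the cache
```

The first call prints `(50, 'main memory')` and the second prints `(50, 'cache')`, because the first miss left a copy of the word in the cache.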

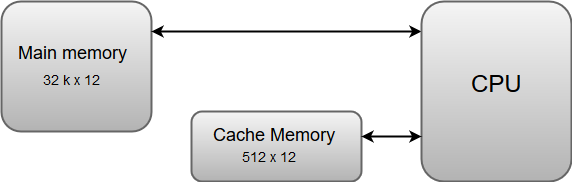

Cache memory is placed between the CPU and the main memory. The block diagram for a cache memory can be represented as:

The cache is the fastest component in the memory hierarchy and approaches the speed of CPU components.

The basic operation of a cache memory is as follows:

- When the CPU needs to access memory, the cache is examined. If the word is found in the cache, it is read from the fast memory.

- If the word addressed by the CPU is not found in the cache, the main memory is accessed to read the word.

- A block of words containing the one just accessed is then transferred from main memory to cache memory. The block size may range from one word (the one just accessed) to about 16 words adjacent to it.

- The performance of the cache memory is frequently measured in terms of a quantity called the hit ratio.

- When the CPU refers to memory and finds the word in cache, it is said to produce a hit.

- If the word is not found in the cache, it is in main memory and it counts as a miss.

- The hit ratio is the number of hits divided by the total number of CPU references to memory (hits plus misses).
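The block transfer on a miss and the hit ratio, h = hits / (hits + misses), can be combined in a short Python sketch. It rests on hypothetical simplifications: a block size of 4 words, blocks aligned to multiples of the block size, and a cache large enough that no block is ever evicted. The function name `hit_ratio` and the constant `BLOCK_SIZE` are illustrative, not taken from any particular system.

```python
# Sketch: count hits and misses for a sequence of word addresses, loading the whole
# block that contains the requested word on every miss.
# Assumptions (hypothetical): BLOCK_SIZE = 4 words, blocks are aligned to multiples
# of BLOCK_SIZE, and the cache never evicts a block.

BLOCK_SIZE = 4


def hit_ratio(addresses, block_size=BLOCK_SIZE):
    cached_blocks = set()  # block numbers currently held in the cache
    hits = misses = 0
    for address in addresses:
        block = address // block_size  # block containing the requested word
        if block in cached_blocks:
            hits += 1  # word found in the cache
        else:
            misses += 1
            cached_blocks.add(block)  # transfer the whole block from main memory
    total = hits + misses
    return hits / total if total else 0.0  # no references -> report 0.0


# Reading words 0..15 in order: one miss per 4-word block, then 3 hits per block.
print(hit_ratio(range(16)))           # 12 hits / 16 references = 0.75
# Reading words 0..7 twice: 2 misses in the first pass, all hits in the second.
print(hit_ratio(list(range(8)) * 2))  # 14 hits / 16 references = 0.875
```

Passing `block_size=1` models the one-word extreme, in which every first reference is a miss. Larger blocks raise the hit ratio for sequential access because the words next to the one just accessed are already in the cache.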
