What are ETL Tools?

Extraction, transformation, and load help the organization to make the data accessible, meaningful, and usable across different data systems. An ETL tool is a software used to extract, transform, and loading the data.

In today’s data-driven world, a vast amount of data is generated from various organizations, machines, and gadgets irrespective of their size.

In the traditional way of programming, ETL all extracting and doing some transformation operations and then load the transformed data into target database file etc.

For this, there is a need to write the coding in any programming language, like Java, C#, C++, etc.

To avoid more coding and use of libraries will reduce our work effort by drag and drop components.

An ETL tool is a set of libraries written in any programming language which will simplify our work to make data integration and transformation operation for any need.

For example, in our mobile, each time we browse the web, some amount of data is generated. A commercial plane can produce up to 500 GB of data per hour. We can think now, how massive this data would be. This is the reason it is known as Big Data, but this data is useless until we perform the ETL operation on it.

Here, we are going through each of the ETL processes.

1. Extract: Extraction of data is the most crucial step of ETL, which involves accessing the data from all the storage systems. The storage system can be RDBMS, excel files, XML files, flat files, Indexed Sequential Access Method (ISAM), etc. The extraction is the most vital step; it needs to be designed in a way that it should not affect the source system. Extraction steps make sure that every item’s parameter is distinctively identified irrespective of its source system.

2. Transform: In Pipeline, transformation is the next process. In this step, aggregate data is analyzed and applied to the various functions on it to transform the data into the required format. Generally, methods are used for the transformation of the data are, conversion, filtering, sorting, standardizing, clearing the duplicates, translating, and verifying the consistency of various data sources.

3. Load: In the process of ETL, loading is the final stage. In this step, the processed data (extracted and transformed data) is loaded to a target data repository, which is the database. When performing this step, it should be ensured that the load function is performed accurately, but by utilizing minimal resources. We have to maintain the referential integrity at the time of loading so that consistency of the data is not loose. When the data is loaded, we can pick any chunk of data and can compare with other pieces easily.

All these operations are performed efficiently by any ETL tool.

The need for ETL tools

Data warehouse tool contains data occurred from distinct sources which are combined in one place to analyze meaningful patterns and insight. ETL processes the heterogeneous data and make it homogeneous, which work smoothly for data scientist. Then data analyst analyzes the data and derives the business intelligence from it.

ETL is easier and faster to use when compared to the traditional method of moving data which involve writing conventional computer programs. ETL tool contains a graphical interface which increases the process of mapping table and column between the source and the target databases.

ETL tools can collect, read, and migrate from multiple data structures and across different platforms like mainframe, server, etc. It can also identify “delta” changes as they occur, enables the ETL tools to copy only changed data without the need to perform full data refreshes.

ETL tools include ready to use operations like filtering, sorting, reformatting, merging, and joining. ETL tools also support transformation scheduling, monitoring, version control, and unified metadata management, while some of the tools integrated with BI tools.

Benefits of ETL tools

It is more beneficial to use ETL tools than using the conventional method of moving data from a source database to a target data repository.

Advantages of using ETL tools are:

Ease of use: The first and foremost advantage of an ETL tool is, it is easy to use. The tool itself specifies data sources and the rules for extracting and processing data, and then it implements the process and loads the data. ETL eliminates the need for coding in a programming sense, where we have to write the procedures and code.

Operational Resilience: Many of the data warehouses are damaged and create operational problems. ETL tools have built-in error handling functionality, and it helps data engineers to build the features of an ETL tool to develop a successful and well-instrumented system.

Visual Flow: ETL tools are based on Graphical User Interface and offer a visual flow of the system’s logic. The graphical interface helps us to specify rules using drag and drop interface to show the flow of data in a process.

Suitable for Complex Data Management Situations: ETL tools help better utility for moving large volumes of data and transferring them in batches. In the case of complex rules and transformation, ETL tools simplify the task, which assists us with the calculations, string manipulations, data changes, and integration of multiple sets of data.

Enhances Business Intelligence: ETL tools improve data accessing and simplify the process of extracting, transforming, and loading. It improves access to information that directly impacts the strategic and operational decision, which is based on data-driven facts. ETL also enables business leaders to retrieve data which is based on specific needs and take decisions according to them.

Advances Data Profiling and Cleansing: ETL tools have a vast set of cleansing functions as compared to the is available in SQL. The advanced function care to the complex transformation needs, which commonly occur in a structurally complex data warehouse.

(repeated) Enhanced Business Intelligence: ETL tools improve data access as it simplifies the process of extracting, transforming, and loading. ETL facilitates to access the information directly, which impacts the strategic and operational decisions, which is base on data-driven facts. ETL tools also enable business leaders to retrieve data based on their specific needs and will take decisions accordingly.

High Return on Investment: The use of ETL tools save cost, by enabling the business to generate higher revenue. From the study of International Data Corporation, it is found that these implementations collect the median 5-year ROI of 112 percent with a mean of payback of 1.6 years.

Performance: Structure of ETL platform simplifies the process of building a high-quality data warehousing system. Several ETL tools come with performance-enhancing technologies like cluster awareness and symmetric multiprocessing.

Types of ETL tools

ETL tools provide various functions to facilitate the workflow. With the increasing popularity of ETL tools, the data warehousing market has seen the importance of different emergence and commercial appliances.

There are multiple tools available these are:

- Talend Data Integration

- Informatica

- Kettle

- Clover ETL

Cloud-Based Tools are

- AWS Glue

- SnapLogic

- Informatica Cloud

- Alation

Some of the tools are:

- Informatica PowerCenter

- Business Objects Data Integrator

- IBM InfoSphere DataStage

- Microsoft SQL Server Integration Services

- Oracle Warehouse Builder/ Data Integrator

- Pentaho Data Integration(Open source)

- Jasper ETL(Open Source)

ETL tool function

ETL tool-based data warehouses use staging area, data integration, and access layer to perform its function. It’s a three-layered structure.

- Staging Layer: Staging database or staging layer is used to store the extracted data from different source data systems.

- Data Integration Layer: Integration Layer transforms the data from the staging layer and moves the data to a database. In the database, data is arranged into hierarchical groups which are called dimensions and into facts and aggregate facts. The combination of dimension tables and events in data warehouse systems is called a schema.

- Access Layer: Access Layer is used by end-users to retrieve the data for analytical reporting or functions.

Informatica PowerCenter Tool

Informatica is a software development company based in California. It extracts the data from a different data source, transform through the different intermediate system, and then loads.

Informatica is a data integration tool which is based on ETL architecture. It provides data integration software and service for various businesses, industries, and government organization, including healthcare, financial, insurance service, healthcare, etc.

To describe this, we will assume the SAP and ORACLE applications.

A company “XYZ” is using SAP application for its business transactions and process. A company “ABC” is using the Oracle for the same. Company “XYZ” owned company “ABC.” Now all the transaction of business, information, and raw data from the entire department will be transferred to company “XYZ.”

Among the many departments, we will take the HR department. If there are 2500 lacks employee associated with company “ABC”, and there is a need to transfer their accounts right from their emp ID to bank accounts with company “XYZ.” So we will use Informatica tool, as it has a data extraction tool which extracts employee information from company “ABC.” Informatica transforms it into a common database with common protocols set to transfer and then load on the company “XYZ” server.

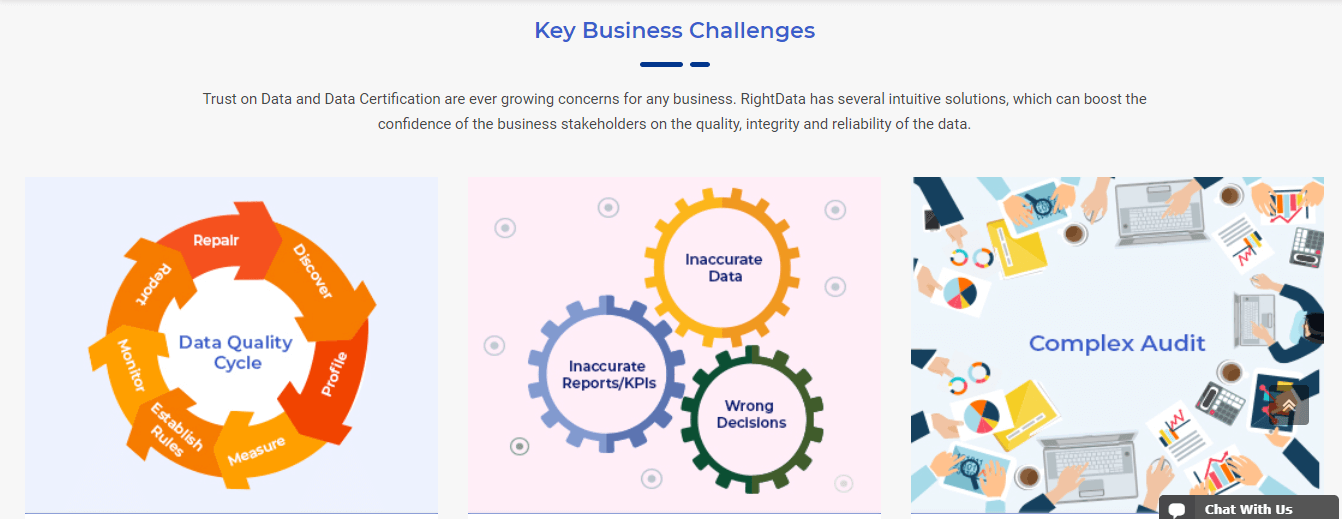

Right Data

RightData is a self-service ETL/Data integration testing tool. It is designed to help business and technology teams with the automation of data quality assurance and data quality control processes.

RightData’s interface allows users to validate and coordinate data between datasets regarding the difference in the data model or the type of data source.

RightData is designed to work efficiently for data platform with high complexity and huge volume.

Features:

- RighData is a powerful universal query studio. Here we can perform queries on any data source ( SAP, BIGDATA, FILES, RDBMS), explore metadata, analyze data, discover data by data profiling, business rule, and transformation validation.

- Using RightData we can perform field to field data compare with the data model, structure between source and target.

- RightData has a custom business rule builder and a set of validation rules

- To facilitate the technical data RightData has bulk comparison capacities. It coordinates across the project landscape.

- RighData’s two-way integration with CICD tools (Jenkins, Jira, BitBucket, etc.) assists our data team journey of DevOps to enable through DataOps.

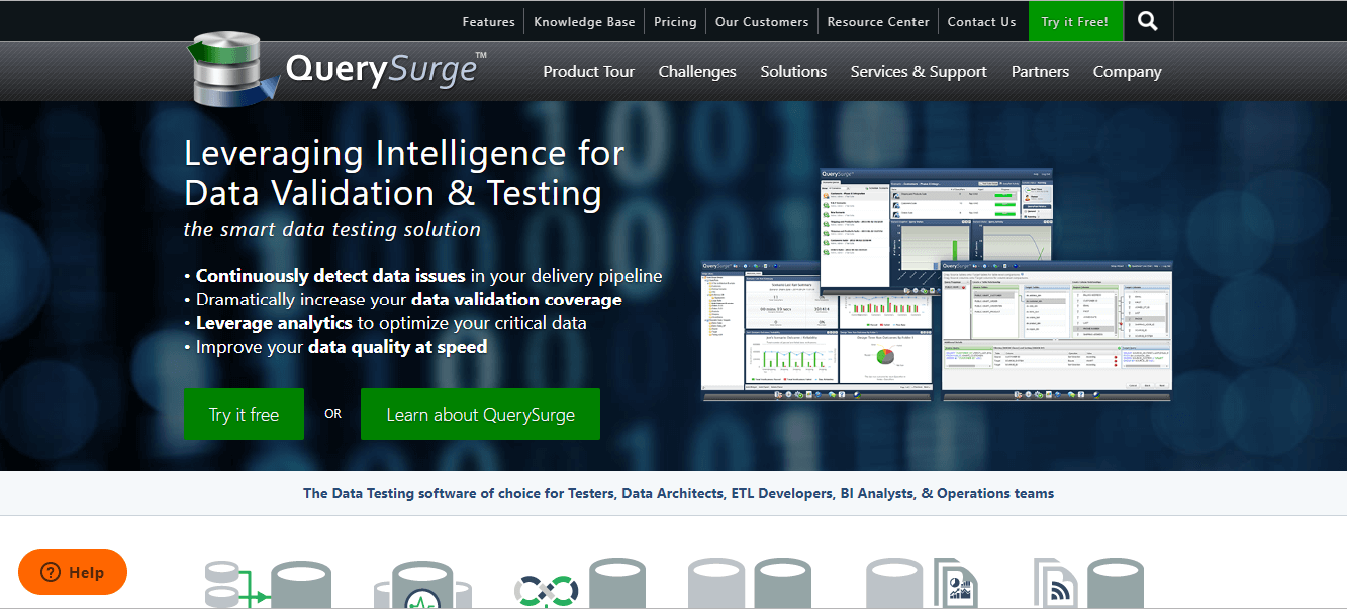

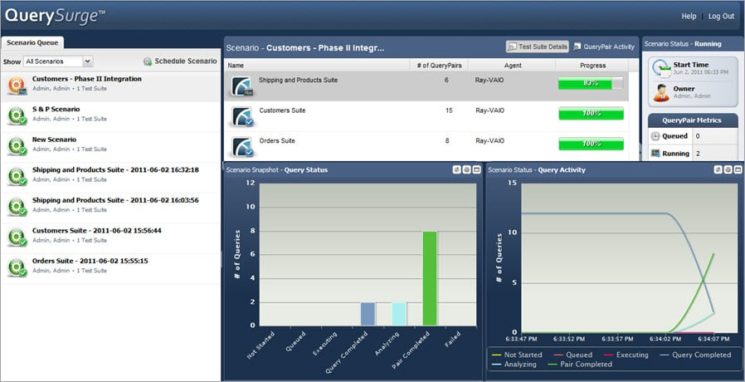

QuerySurge

QuerySurge tool is built for testing of Data Warehouse and Big Data. It also takes care that the data extracted and loaded from the source system to the destination system is correct, and it is as per the expected format. Any issues or differences can be identified very quickly with QuerySurge.

Features:

- QuerySurge is an automated tool for ETL Testing and Big Data testing. It improves the quality of data and accelerates testing cycles.

- It validates data using the Query Wizard.

- It saves time and cost by automating the manual efforts and schedule test for a particular time.

- QuerySurge supports ETL testing for various platforms like IBM, Oracle, Microsoft, etc.

- It helps to build test scenario and test suit along with configurable reports without the knowledge of SQL.

- Through an automated process, it generates the email.

- QuerySurge verifies, converts, and upgrades data through the ETL process.

- It is a commercial tool which connects source and upgrades the data through the ETL process.

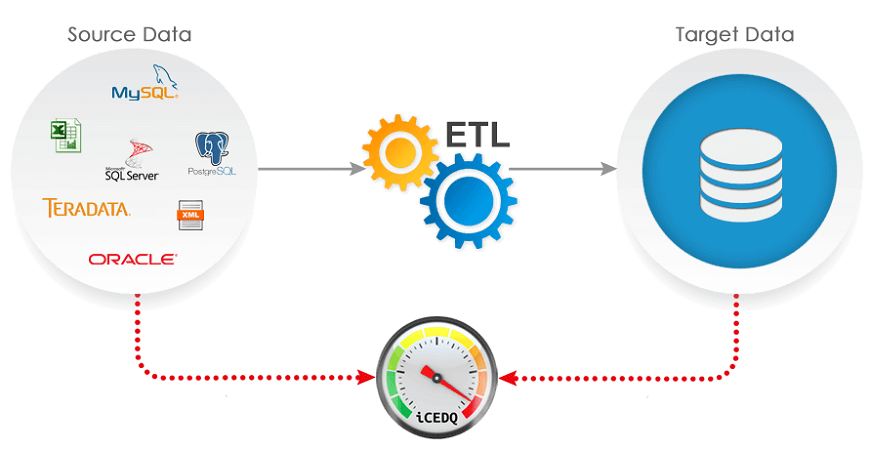

iCEDQ

icedq is an automated ETL testing tool. It is designed for the issues faced in the data-centric project like a warehouse, data migration, etc. icedq performs verification, validation, and coordination between the source and the systems. It ensures if the data is flawless after migration, and it avoids bad data to load into the target system.

Features:

- iCEDQ is a unique ETL testing tool when we compares millions of files and rows of data.

- It helps to identify the exact column and rows which contains data issue.

- It supports regression testing.

- It sends notifications and alerts to the subscribed users after execution.

- Icedq supports various databases and can read data from any database.

- Based on unique columns in the database, Icedq compares the data in memory.

- icedq identifies data integration errors without any custom code.

- It is a commercial tool with 30 days trial and provides the custom reports with alerts and notifications.

- icedq big data edition uses the power of the cluster.

QualiDI

QualiDI is an automated testing platform which offers end to end testing and ETL testing. It automates ETL testing and improves the effectiveness of ETL testing. It also reduces the testing cycle and improves data quality. QualiDI identifies bad data very quickly. QualiDI reduces regression cycle and data validation.

Features:

- QualiDI creates automated test cases, and it also provides support for comparing the automated data.

- It can be integrated with HPQC, Hadoop, etc.

- It supports email notifications.

- It supports continuous integration process.

- It helps to read the data and to trace the data.

- QualiDI manages complex BI testing cycle, eliminates human error, and data quality management.

Advantage

Advantages of QualiDI are given below:

- QualiDI enables Agile Development.

- It improves efficiency and saves cost.

- The advantage of QualiDI is it allows the traceability of the test cases.

- It also helps to reduce the defects.

- It helps in the integration process.

- It helps in the validation of the data.

- It also supports the continuous integration process.

Talend Open Studio for Data Integration

Talend open studio for integration is an open-source tool which makes ETL testing easier. It includes all ETL testing functionality and additional continuous delivery mechanism. With the help of Talend Data Integration Tool, the user can run the ETL jobs on the remote server with a variety of operating system.

ETL testing ensures that the data is transformed from the source system to the target system without any loss of data and follow the transformation rules.

Features:

- Talend Data Integration supports any relational Database, flat files, etc.

- Integrated GUI simplifies the designs and develops the ETL process.

- Talend supports remote job execution.

- With the help of Talend, we can identify defects at an early stage, which helps to reduce the cost.

- Talend can detect business ambiguity and consistency in transformation rule quickly.

- In Talend context, switching is possible.

- Talend can track the real-time data flow with detailed execution statistics.

TestBench

The TestBench is a database management and verification tool. It provides unique solution which addresses issues related to the database. Users manage data rollback, which improves accuracy and testing productivity. It also helps to reduce the environment downtime.

Features:

- TestBench maintains data confidentiality to protect the data.

- It improves knowledge about decision making.

- To improve test efficiency, it customizes the data.

- It helps to cover the maximum test coverage and helps to reduce time and money.

- In TestBench, the data privacy rules ensure that the live data is not available in the test environment.

- We can analyze the relationship between the tables with the help of TestBench and maintains the integrity between the tables.

DBFit

DBFit is an open-source testing tool. DBFit was released under the GPL license. It writes the unit and integration test for any database code. To maintain the test, DBFit is easy and can be executed from the browser directly. Tests are written using tables and performed using the command line or Java IDE. It supports the databases like Oracle, MySql, DB2, PostgreSQL, and SQL Server.

Data-Centric Testing

The data-centric Testing tool performs robust data validation to avoid data loss or data consistency during the transformation of data. It compares the data between the systems and ensures that the data loaded into the target system and it should match the source system in terms of data volume, format, data type, etc.

Features:

- This testing is build to perform Data Warehouse Testing and ETL testing.

- Data-Centric testing is the oldest and largest testing practice.

- It offers data migration, ETL testing, and coordination.

- Data-Centric Testing supports various relational database, flat files, etc.

- Data-Centric Testing also supports reporting.

Conclusion

ETL testing is not only the tester’s responsibility; it also involves developers, business analyst, database administrators (DBA) and users. ETL testing process became necessary as it is required to make a strategic decision at a regular time interval.

ETL testing is also known as enterprise testing because it requires good knowledge of SDLC, SQL queries, ETL procedures, etc.