Linux Memory Management

The Linux memory management subsystem is responsible for managing the memory in the system. This includes the implementation of virtual memory and demand paging.

It also covers memory allocation for both user space programs and kernel internal structures, the mapping of files into the address space of processes, and several other things.

The Linux memory management subsystem is a complex system with many configurable settings. Most of these settings are available through the /proc filesystem and can be queried and adjusted with sysctl. These interfaces are described in man 5 proc and in the documentation for /proc/sys/vm/.
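
As a rough illustration of the /proc interface, the sketch below maps a dotted sysctl name to its file under /proc/sys and reads the value. The helper names `sysctl_path` and `read_sysctl` are hypothetical, `vm.swappiness` is only an example of a commonly present setting, and the code assumes a Linux system with /proc mounted.

```python
from pathlib import Path

PROC_SYS = Path("/proc/sys")

def sysctl_path(name: str) -> Path:
    """Translate a dotted sysctl name such as 'vm.swappiness' into its /proc/sys path."""
    return PROC_SYS.joinpath(*name.split("."))

def read_sysctl(name: str):
    """Return the current value as a string, or None if the file cannot be read."""
    try:
        return sysctl_path(name).read_text().strip()
    except OSError:
        return None

if __name__ == "__main__":
    # Prints None on systems where the setting is not available.
    print("vm.swappiness =", read_sysctl("vm.swappiness"))
```

Writing a new value to the same file (as root) is what adjusting the setting through sysctl amounts to.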

Linux memory management has its own jargon. This article explains the main mechanisms of Linux memory management and the terms used to describe them.

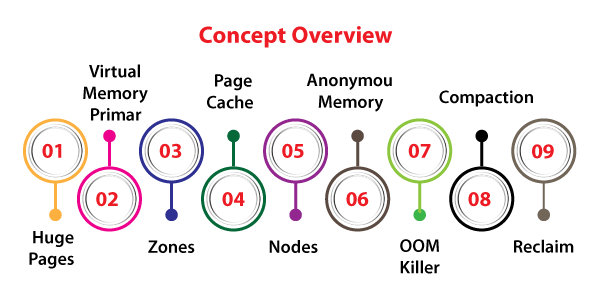

Concepts Overview

Linux memory management is a complex system that has accumulated a great deal of functionality in order to support a wide variety of systems, from MMU-less microcontrollers to supercomputers.

Memory management for systems without an MMU is known as nommu, and it deserves a dedicated document, which will hopefully be written eventually. However, some of the concepts are the same.

Here, we assume that an MMU is available and that the CPU can translate a virtual address into a physical address.

- Huge Pages

- Virtual Memory Primer

- Zones

- Page Cache

- Nodes

- Anonymous Memory

- OOM killer

- Compaction

- Reclaim

Huge Pages

Address translation requires several memory accesses, and memory accesses are slow relative to the speed of the CPU. To avoid spending precious processor cycles on address translation, CPUs maintain a cache of such translations known as the Translation Lookaside Buffer (TLB). The TLB is usually a scarce resource, so mapping memory with larger (huge) pages lets each TLB entry cover more memory and reduces TLB misses.
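
To see why page size matters for the TLB, the following sketch counts how many translations are needed to cover a working set with 4 KiB pages versus 2 MiB huge pages. The function name and the 1 GiB working set are illustrative assumptions, not values taken from any particular system.

```python
KiB = 1 << 10
MiB = 1 << 20
GiB = 1 << 30

def translations_needed(working_set_bytes: int, page_size_bytes: int) -> int:
    """Number of distinct page translations needed to cover the working set."""
    return -(-working_set_bytes // page_size_bytes)  # ceiling division

small = translations_needed(1 * GiB, 4 * KiB)
huge = translations_needed(1 * GiB, 2 * MiB)
print(f"4 KiB pages: {small} translations")
print(f"2 MiB pages: {huge} translations")
```

With far fewer translations to cache, the same TLB covers much more of the working set.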

Virtual Memory Primer

In a computer system, physical memory is a limited resource. Physical memory is not necessarily contiguous; it may be accessible as a set of distinct address ranges. Besides, different CPU architectures, and even different implementations of the same architecture, have different views of how these address ranges are defined.

All of this makes dealing with physical memory directly quite difficult, and the concept of virtual memory was developed to avoid this complexity.

Virtual memory abstracts the details of physical memory away from application software.

It allows only the needed information to be kept in physical memory (demand paging), and it provides a mechanism for protection and controlled sharing of data between processes.

Zones

Linux groups memory pages into zones according to their possible usage. For example, ZONE_DMA contains memory that can be used by devices for DMA, ZONE_HIGHMEM contains memory that is not permanently mapped into the kernel's address space, and ZONE_NORMAL contains normally addressed pages.

Page Cache

Since physical memory is volatile, the common way to get data into memory is to read it from files.

Whenever a file is read, the data is put into the page cache to avoid expensive disk access on subsequent reads.

Similarly, whenever a file is written, the data is placed in the page cache and eventually makes its way to the backing storage device.

Nodes

Many multi-processor machines are NUMA (Non-Uniform Memory Access) systems. In such systems, the memory is arranged into banks that have different access latency depending on their “distance” from the processor. Each bank is referred to as a node, and for each node Linux constructs an independent memory management subsystem. A node has its own set of zones, lists of free and used pages, and various statistics counters.

Anonymous Memory

Anonymous memory, or an anonymous mapping, is memory that is not backed by a file system. Such mappings are created implicitly for the program's stack and heap, or by explicit calls to the mmap(2) system call.

Usually, anonymous mappings only define the areas of virtual memory that a program is allowed to access.
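
A minimal sketch of an explicit anonymous mapping, using Python's standard `mmap` module as a stand-in for a direct mmap(2) call. The function name `anonymous_roundtrip` is hypothetical; passing -1 as the file descriptor asks for memory that is not backed by any file.

```python
import mmap

def anonymous_roundtrip(size: int = mmap.PAGESIZE) -> bytes:
    """Create an anonymous mapping, write to it, and read the bytes back."""
    # fileno=-1 requests a mapping with no file behind it.
    with mmap.mmap(-1, size) as region:
        region[:5] = b"hello"
        return bytes(region[:5])

if __name__ == "__main__":
    print(anonymous_roundtrip())
```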

OOM killer

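On a heavily loaded machine, memory may be exhausted and the kernel may be unable to reclaim enough memory to continue operating. In that case the kernel invokes the OOM (out-of-memory) killer, which selects a task to sacrifice so that the rest of the system can keep running.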

Compaction

As the system runs, tasks allocate and free memory, and it becomes fragmented. Although virtual memory makes it possible to present scattered physical pages as a virtually contiguous range, it is sometimes necessary to allocate large physically contiguous areas. Memory compaction addresses this fragmentation problem.

Reclaim

Linux memory management treats a page differently depending on how it is used. Pages that can be freed at any time, either because they cache data that is available elsewhere (for instance, on a hard disk) or because they can be swapped out (again, to the hard disk), are known as reclaimable.

CMA Debugfs Interface

The CMA debugfs interface is useful for retrieving basic information about the different CMA areas and for testing allocation and release in each area.

Each CMA zone is represented by a directory under <debugfs>/cma/, indexed by the kernel's CMA index. Hence, the first CMA zone is <debugfs>/cma/cma-0.

The structure of the files created under that directory is as follows:

- [RO] base_pfn: The base PFN (Page Frame Number) of the zone.

- [RO] order_per_bit: The order of pages represented by one bit.

- [RO] count: The amount of memory in the CMA area.

- [RO] bitmap: The bitmap of pages in the zone.

- [WO] alloc: Allocate N pages from that CMA area by writing N to this file.

HugeTLB Pages

Introduction

The goal of this section is to give a brief overview of hugetlbpage support in the Linux kernel. This support is built on top of the multiple page size support provided by most modern architectures.

For example, x86 CPUs normally support 4K and 2M page sizes, the ia64 architecture supports multiple page sizes (4K, 8K, 64K, 256K, 1M, 4M, 16M, and 256M), and ppc64 supports 4K and 16M.

A TLB is a cache of virtual-to-physical translations. It is typically a very scarce resource on a processor.

Operating systems try to make the best use of the limited number of TLB resources.

This optimization is more critical now that larger physical memories (several GBs) are more readily available.

Users can use the huge page support in the Linux kernel through either the mmap system call or the classical SYSV shared memory system calls (shmget and shmat).

First, the Linux kernel needs to be built with the CONFIG_HUGETLBFS and CONFIG_HUGETLB_PAGE configuration options.

The /proc/meminfo file provides information about the total number of persistent hugetlb pages in the kernel's huge page pool.

It also shows the default huge page size and the number of free, reserved, and surplus huge pages in the pool of huge pages of the default size.
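
The huge page fields of /proc/meminfo can be picked out with a small parser. This is a minimal sketch: the function name `parse_hugepage_info` is hypothetical, and it assumes the usual field names (HugePages_Total, HugePages_Free, HugePages_Rsvd, HugePages_Surp, Hugepagesize) with Hugepagesize reported in kB.

```python
import re

HUGE_FIELDS = ("HugePages_Total", "HugePages_Free", "HugePages_Rsvd",
               "HugePages_Surp", "Hugepagesize")

def parse_hugepage_info(meminfo_text: str) -> dict:
    """Extract huge page fields from /proc/meminfo-style text.

    Page counts are returned as integers; Hugepagesize is converted
    from kB to bytes.
    """
    info = {}
    for line in meminfo_text.splitlines():
        match = re.match(r"(\w+):\s+(\d+)(?:\s+kB)?", line)
        if match and match.group(1) in HUGE_FIELDS:
            key, value = match.group(1), int(match.group(2))
            info[key] = value * 1024 if key == "Hugepagesize" else value
    return info

if __name__ == "__main__":
    try:
        with open("/proc/meminfo") as f:
            print(parse_hugepage_info(f.read()))
    except OSError:
        print("/proc/meminfo is not available on this system")
```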

The huge page size is needed to generate the proper alignment and size of the arguments to the system calls that map huge page regions.
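
A minimal sketch of the size calculation: the requested length is rounded up to a multiple of the huge page size before it is passed to a mapping call. The helper name `align_to_huge_page` and the 2 MiB page size are assumptions for illustration; on a real system the page size would come from /proc/meminfo.

```python
MiB = 1 << 20

def align_to_huge_page(length: int, huge_page_size: int) -> int:
    """Round length up to the next multiple of the huge page size."""
    if length <= 0 or huge_page_size <= 0:
        raise ValueError("length and huge_page_size must be positive")
    return ((length + huge_page_size - 1) // huge_page_size) * huge_page_size

if __name__ == "__main__":
    aligned = align_to_huge_page(3 * MiB, 2 * MiB)
    print(f"3 MiB request maps as {aligned // MiB} MiB")
```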

The /proc/sys/vm/nr_hugepages file indicates the current number of “persistent” huge pages in the kernel's huge page pool.

“Persistent” huge pages are returned to the huge page pool when a task frees them. A user with root privileges can dynamically allocate more persistent huge pages, or free some of them, by increasing or decreasing the value of nr_hugepages.

Pages that are used as huge pages are reserved inside the kernel and cannot be used for other purposes. Huge pages cannot be swapped out under memory pressure.

Idle Page Tracking

Motivation

This feature allows tracking which memory pages are being accessed by a workload and which are idle.

This information can be useful for estimating the working set size of the workload, which in turn can be taken into account when configuring the workload's parameters, setting memory cgroup limits, or deciding where to place the workload within a compute cluster.

It is enabled by the CONFIG_IDLE_PAGE_TRACKING=y kernel configuration option.

User API

The idle page tracking API is located at /sys/kernel/mm/page_idle. It currently consists of a single read-write file, /sys/kernel/mm/page_idle/bitmap.

The file implements a bitmap in which each bit corresponds to a memory page. The bitmap is represented by an array of 8-byte integers, and the page at PFN #i is mapped to bit #i%64 of array element #i/64 (byte order is native). The corresponding page is idle if the bit is set.
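
The PFN-to-bit mapping described above can be sketched as follows. The helper names are hypothetical, and `is_idle` works on an in-memory buffer assumed to start at PFN 0 rather than on the sysfs file itself, which normally requires root to read.

```python
import struct

def idle_bit_location(pfn: int):
    """Return (byte offset of the 8-byte array element, bit index) for a PFN."""
    element, bit = divmod(pfn, 64)
    return element * 8, bit

def is_idle(bitmap_bytes: bytes, pfn: int) -> bool:
    """Check the idle bit for pfn in a buffer that starts at PFN 0."""
    offset, bit = idle_bit_location(pfn)
    # "=Q" is an unsigned 8-byte integer in native byte order.
    (word,) = struct.unpack_from("=Q", bitmap_bytes, offset)
    return bool((word >> bit) & 1)

if __name__ == "__main__":
    # Two array elements; only bit 6 of element 1 (PFN 70) is set.
    demo = struct.pack("=QQ", 0, 1 << 6)
    print(idle_bit_location(70), is_idle(demo, 70), is_idle(demo, 69))
```

The byte offset is also the position to seek to when reading the element for a given PFN from the bitmap file.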

Implementation Details

Internally, the kernel keeps track of accesses to user memory pages so that it can reclaim unreferenced pages first when memory runs short. A page is considered referenced if it has been accessed recently through a process address space, or if it has been marked accessed explicitly by the kernel. The latter happens when:

- A userspace process reads or writes a page using a system call (e.g., read(2) or write(2))

- A page is accessed by a device driver using get_user_pages()

- A page that is used for storing filesystem buffers is read or written because a process needs filesystem metadata stored in it (e.g., it lists a directory tree)

Kernel Samepage Merging

Kernel samepage merging (or KSM) is a memory-saving de-duplication feature. It is enabled by CONFIG_KSM=y and was added to the Linux kernel in 2.6.32.

KSM was originally developed for use with KVM (where it was known as Kernel Shared Memory), to fit more virtual machines into physical memory by sharing the data common between them.

However, it can be useful to any application that generates many instances of the same data.

The KSM daemon, ksmd, periodically scans the areas of user memory that have been registered with it, looking for pages of identical content that can be replaced by a single write-protected page (which is copied automatically if a process later wants to update its content).

The number of pages that the KSM daemon scans in a single pass, and the time between passes, are configured through the sysfs interface.

KSM only merges anonymous (private) pages, never pagecache (file) pages. KSM's merged pages were originally locked into kernel memory, but they can now be swapped out just like other user pages.

Controlling KSM using madvise

KSM only operates on those areas of address space that an application has advised to be likely candidates for merging, by calling the madvise(2) system call with MADV_MERGEABLE.

The application may later call madvise(2) with MADV_UNMERGEABLE on the same range to cancel that advice and restore unshared pages.

Note: The unmerging call may suddenly require more memory than is available, possibly failing with EAGAIN.
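
A minimal sketch of registering a region with KSM from user space, using the `madvise` method of Python's `mmap` objects (Python 3.8 or later) as a stand-in for the madvise(2) call. The helper names are hypothetical. The `MADV_MERGEABLE` and `MADV_UNMERGEABLE` constants are only present on platforms that define them, and the call can fail on kernels built without KSM, so the sketch reports failure instead of raising.

```python
import mmap

def private_anonymous_region(size: int) -> mmap.mmap:
    """Create a private anonymous mapping (KSM only merges private anonymous pages)."""
    if hasattr(mmap, "MAP_PRIVATE") and hasattr(mmap, "MAP_ANONYMOUS"):
        return mmap.mmap(-1, size, flags=mmap.MAP_PRIVATE | mmap.MAP_ANONYMOUS)
    return mmap.mmap(-1, size)  # fallback for platforms without these flags

def ksm_advise(region: mmap.mmap, advice_name: str) -> bool:
    """Apply MADV_MERGEABLE or MADV_UNMERGEABLE; return False if unsupported."""
    advice = getattr(mmap, advice_name, None)
    if advice is None or not hasattr(region, "madvise"):
        return False
    try:
        region.madvise(advice)
        return True
    except OSError:  # for example, a kernel built without CONFIG_KSM
        return False

if __name__ == "__main__":
    with private_anonymous_region(16 * mmap.PAGESIZE) as region:
        print("mergeable:", ksm_advise(region, "MADV_MERGEABLE"))
        print("unmergeable:", ksm_advise(region, "MADV_UNMERGEABLE"))
```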

KSM daemon sysfs interface

The KSM daemon is controlled by sysfs files in /sys/kernel/mm/ksm/, which are readable by all but writable only by root:

pages_to_scan

It determines how many pages to scan before the ksmd daemon goes to sleep.

For example, write 100 to /sys/kernel/mm/ksm/pages_to_scan.

Note: The default is 100 (chosen for demonstration purposes).

sleep_millisecs

It determines how many milliseconds the ksmd daemon should sleep before the next scan.

For example, write 20 to /sys/kernel/mm/ksm/sleep_millisecs.

Note: The default is 20 (chosen for demonstration purposes).

run

Set to 0 to stop the ksmd daemon from running but keep the merged pages.

Set to 1 to run the ksmd daemon, e.g., by writing 1 to /sys/kernel/mm/ksm/run.

Set to 2 to stop the ksmd daemon and unmerge all pages that are currently merged, but leave mergeable areas registered for the next run.

max_page_sharing

The maximum sharing allowed for each KSM page. It enforces a deduplication limit to avoid high latency for virtual memory operations that involve traversing the virtual mappings that share the KSM page.

The minimum value is 2, as a newly created KSM page has at least two sharers. Slowing down this traversal means higher latency for certain virtual memory operations that happen during swapping, compaction, NUMA balancing, and page migration.

stable_node_chains_prune_millisecs

It specifies how frequently KSM checks the metadata of the pages that hit the deduplication limit for stale information. Smaller millisecs values free up the KSM metadata with lower latency.

However, they make the ksmd daemon use more CPU during the scan. It is a no-op if no KSM page has hit max_page_sharing yet.

The effectiveness of KSM and MADV_MERGEABLE is shown in /sys/kernel/mm/ksm/:

pages_shared

How many shared pages are being used.

pages_sharing

How many more sites are sharing them, i.e., how much is saved.

pages_unshared

How many pages are unique but repeatedly checked for merging.

full_scans

How many times all mergeable areas have been scanned.

pages_volatile

How many pages are changing too fast to be placed in a tree.

stable_node_chains

The number of KSM pages that hit the max_page_sharing limit.

stable_node_dups

The number of duplicated KSM pages.

A high ratio of pages_sharing to pages_shared indicates good sharing, whereas a high ratio of pages_unshared to pages_sharing indicates wasted effort.

pages_volatile covers several different kinds of activity, but a high proportion there would also indicate poor use of madvise MADV_MERGEABLE.

The maximum possible pages_sharing/pages_shared ratio is limited by the max_page_sharing tunable. To increase the ratio, max_page_sharing must be increased accordingly.
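
The ratios discussed above can be computed from the counters under /sys/kernel/mm/ksm/. This is a minimal sketch with hypothetical helper names; counters that are missing or unreadable (for example, on a kernel without KSM) are simply skipped.

```python
from pathlib import Path

KSM_DIR = Path("/sys/kernel/mm/ksm")

def read_ksm_counters(names=("pages_shared", "pages_sharing", "pages_unshared")):
    """Read the named counters; missing or unreadable files are skipped."""
    counters = {}
    for name in names:
        try:
            counters[name] = int((KSM_DIR / name).read_text())
        except (OSError, ValueError):
            pass
    return counters

def ratio(counters, numerator, denominator):
    """numerator / denominator, or None when the denominator is zero or absent."""
    bottom = counters.get(denominator, 0)
    return counters.get(numerator, 0) / bottom if bottom else None

if __name__ == "__main__":
    counters = read_ksm_counters()
    print("sharing ratio:", ratio(counters, "pages_sharing", "pages_shared"))
    print("wasted effort:", ratio(counters, "pages_unshared", "pages_sharing"))
```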

Memory Hotplug

This section describes memory hotplug, including its current status and how to use it. Because memory hotplug is still under development, this text is likely to change often.

Introduction

Memory hotplug objective

Memory hotplug allows users to increase or decrease the amount of memory. There are generally two purposes:

(1) To change the amount of memory. This allows a feature such as capacity on demand.

(2) To physically install or remove DIMMs or NUMA nodes. This is for exchanging DIMMs/NUMA nodes and reducing power consumption.

The first is required by highly virtualized environments, and the second is required by hardware that supports memory power management.

Linux memory hotplug is designed for both purposes.

Memory hotplug phases

In memory hotplug, there are mainly two phases:

- The physical phase of memory hotplug

- The logical phase of memory hotplug

The physical phase communicates with the hardware or firmware and creates or removes the environment for hotplugged memory. This phase is necessary for purpose (2), but it is also a good phase for communication between highly virtualized environments.

When memory is hotplugged, the kernel recognizes the new memory, creates new memory management tables, and creates the sysfs files for operating on the new memory.

If the firmware supports notifying the operating system that new memory has been connected, this phase is triggered automatically. ACPI can provide this notification. If it does not, the system administrator uses the “probe” operation instead.

The logical phase changes the state of memory to available or unavailable for users. The amount of memory visible to the user is changed by this phase. When a memory range becomes available, the kernel makes all memory in it available as free pages.

In this document, this phase is described as online/offline.

The logical phase is triggered when a system administrator writes to a sysfs file. For the hot-add case, it must be run by hand after the physical phase.

Memory online/offline task unit

Memory hotplug uses the SPARSEMEM memory model, which allows memory to be divided into chunks of the same size. These chunks are known as “sections”. The size of a memory section is architecture-dependent.

Memory sections are combined into chunks known as “memory blocks”. The size of a memory block represents the logical unit on which memory online/offline operations are performed. It is also architecture-dependent.

The default size of a memory block is the same as the memory section size unless an architecture specifies otherwise.

To determine the size of a memory block in bytes, read the file /sys/devices/system/memory/block_size_bytes.

Configuration of Kernel

To use the memory hotplug feature, the kernel must be compiled with the following config options.

For all memory hotplug:

- Memory model -> Sparse Memory (CONFIG_SPARSEMEM)

- Allow for memory hot-add (CONFIG_MEMORY_HOTPLUG)

To enable memory removal, the following are also necessary:

- Allow for memory hot remove (CONFIG_MEMORY_HOTREMOVE)

- Page Migration (CONFIG_MIGRATION)

For ACPI memory hotplug, the following are also necessary:

- Memory hotplug (under the ACPI Support menu)

- This option can be a kernel module.

As a related configuration, if the machine supports NUMA-node hotplug through ACPI, then this option is necessary too:

- ACPI0004, PNP0A05, and PNP0A06 Container Driver (under the ACPI Support menu) (CONFIG_ACPI_CONTAINER)

- This option can also be a kernel module.

Memory hotplug sysfs files

Each memory block has its device information in sysfs. Each memory block is described by a directory of the form /sys/devices/system/memory/memoryXXX,

where XXX is the id of the memory block.

For a memory block covered by a sysfs directory, it is expected that all memory sections in its range are present and that the range contains no memory holes.

There is currently no way to determine whether a memory hole exists. However, the existence of one should not affect the hotplug capabilities of the memory block.

For example, assume a memory block size of 1GiB. The device for memory starting at 0x100000000 is memory4, since 0x100000000 / 1GiB = 4.

This device covers the address range 0x100000000 … 0x140000000.
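
The arithmetic in this example can be sketched as follows, assuming a block id is simply the start address divided by the block size. The helper name `memory_block_for` is hypothetical; on a real system the block size would be read from sysfs rather than hard-coded.

```python
GiB = 1 << 30

def memory_block_for(address: int, block_size: int):
    """Return (block id, start address, end address) of the block holding address."""
    block_id = address // block_size
    start = block_id * block_size
    return block_id, start, start + block_size

if __name__ == "__main__":
    block_id, start, end = memory_block_for(0x100000000, 1 * GiB)
    print(f"memory{block_id}: {start:#x} .. {end:#x}")
```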

Under each memory block, there are five files: phys_index, phys_device, state, removable, and valid_zones.

No-MMU memory mapping support

The kernel has limited support for memory mapping under no-MMU conditions. From the userspace point of view, memory mapping is used in conjunction with the mmap() system call, the execve() system call, and the shmat() call.

From the kernel's point of view, execve() mapping is performed by the binfmt drivers, which call back into the mmap() routines to do the actual work.

Memory mapping behavior also involves the way fork(), vfork(), clone(), and ptrace() work. Under uClinux there is no fork(), and clone() must be supplied the CLONE_VM flag.

The behavior is similar between the MMU and no-MMU cases, but it is not identical. It is also much more restricted in the latter case.

1. Anonymous mapping, MAP_PRIVATE

- In the MMU case: VM regions are backed by arbitrary pages.

- In the no-MMU case: VM regions are backed by arbitrary contiguous runs of pages.

2. Anonymous mapping, MAP_SHARED

These behave very much like private mappings, except that in the MMU case they are shared across fork() or clone() without CLONE_VM. Since the no-MMU case does not support these, the behavior there is identical to MAP_PRIVATE.

3. File, !PROT_WRITE, PROT_READ/PROT_EXEC, MAP_PRIVATE

- In the MMU case: VM regions are backed by pages read from the file. Changes to the underlying file are reflected in the mapping.

- In the no-MMU case:

- If one exists, the kernel re-uses an existing mapping to the same segment of the same file if it has compatible permissions, even if it was created by another process.

- If possible, the file mapping is made directly on the backing device, provided the device has the NOMMU_MAP_DIRECT capability and appropriate mapping protection capabilities. Ramfs, romfs, cramfs, and mtd might all permit this.

- Writes to the file do not affect the mapping. Writes to the mapping are visible in other processes, but should not happen.

4. File, PROT_WRITE, PROT_READ/PROT_EXEC, MAP_PRIVATE

- In the MMU case: works like the non-PROT_WRITE case, except that the pages in question are copied before the write actually happens.

- In the no-MMU case: works like the non-PROT_WRITE case, except that a copy is always taken and never shared.

5. File, PROT_READ/PROT_EXEC/PROT_WRITE, MAP_SHARED, file/blockdev

- In the MMU case: VM regions are backed by pages read from the file; writes to the file are reflected in the pages backing the mapping; shared across fork.

- In the no-MMU case: not supported.

6. PROT_READ/PROT_EXEC/PROT_WRITE, MAP_SHARED, memory backed blockdev

- In the MMU case: as for ordinary regular files.

- In the no-MMU case: as for memory-backed regular files, but the blockdev must be able to provide a contiguous run of pages without truncate being called. The ramdisk driver could do this if it allocated all of its memory as a contiguous array upfront.

7. PROT_READ/PROT_EXEC/PROT_WRITE, MAP_SHARED, memory backed regular file

- In the MMU case: as for ordinary regular files.

- In the no-MMU case: the filesystem providing the memory-backed file (such as ramfs or tmpfs) may choose to honor an open, truncate, mmap sequence by providing a contiguous sequence of pages to map.

In that case, a shared-writable memory mapping is possible, and it works as in the MMU case.

If the filesystem does not provide this kind of support, the mapping request is denied.

8. PROT_READ/PROT_EXEC/PROT_WRITE, MAP_SHARED, memory backed chardev

- In the MMU case: as for ordinary regular files.

- In the no-MMU case: the character device driver may choose to honor the mmap() by providing direct access to the underlying device if it provides memory or quasi-memory that can be accessed directly. If the driver does not provide this kind of support, the mapping request is denied.

Further key points on no-MMU memory

- A request for a private mapping of a file may return a buffer that is not page-aligned. This is because XIP may take place, and the data may not be page-aligned in the backing store.

- The memory allocated by a request for an anonymous mapping is normally cleared by the kernel before being returned, in accordance with the Linux man pages.

- A request for an anonymous mapping is always page-aligned. Where possible, the size of the request should be a power of two; otherwise some of the space may be wasted, because the kernel must allocate a power-of-two granule.

- A list of all the mappings in use by a process is visible through /proc/<pid>/maps in no-MMU mode.

- A list of all the private copy and anonymous mappings on the system is visible through /proc/maps in no-MMU mode.
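
The power-of-two advice for anonymous mappings can be illustrated with a little arithmetic. This is a sketch under the assumption that a no-MMU kernel rounds each anonymous request up to the next power-of-two granule and does not trim the excess; the helper name is hypothetical.

```python
def power_of_two_granule(request_bytes: int) -> int:
    """Smallest power of two that is greater than or equal to request_bytes."""
    if request_bytes <= 0:
        raise ValueError("request_bytes must be positive")
    return 1 << (request_bytes - 1).bit_length()

if __name__ == "__main__":
    for request in (4096, 5000, 12288):
        granule = power_of_two_granule(request)
        print(f"request {request}: granule {granule}, unused {granule - request}")
```

A request that is already a power of two leaves nothing unused, which is why such sizes are preferred.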