What is ETL?

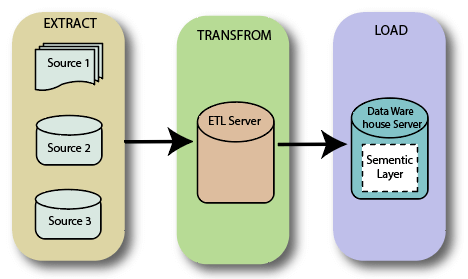

ETL stands for Extract Transform and Load. ETL combines all the three database function into one tool to fetch data from one database and place it into another database.

Extract: Extract is the process of fetching (reading) the information from the database. At this stage, data is collected from multiple or different types of sources.

Transform: Transform is the process of converting the extracted data from its previous form into the required form. Data can be placed into another database. Transformation can occur by using rules or lookup tables or by combining the data with other data.

Load: Load is the process of writing the data into the target database.

ETL is used to integrate the data with the help of three steps Extract, Transform, and Load, and it is used to blend the data from multiple sources. It is often used to build a data warehouse.

In the ETL process, data is extracted from the source system and convert into a format that can be examined and stored into a data warehouse or any other system. ETL is an alternate but a related approach which is designed to push processing down to database to improve the performance.

Example:

We are taking an example of a retail store which has different departments like sales, marketing, logistics, etc. Each of them is handling the customer’s information independently, and the way each department store the data is quite different. Sales department stored it by the customer’s name and marketing department store it by customer id. Now, if we want to check the history of the customer and want to know what the different products he/she bought owing to various campaigns; it would be very tedious.

The solution for this is to use a data warehouse to store information from different sources in a uniform structure using ETL. ETL tools extract the data from all these data sources and transform the data (like applying calculations, joining fields, removing incorrect data fields, etc.) and loads into a data warehouse. ETL can transform unique data sets into a unified structure. After that, we will use BI tools to find out the meaningful reports, dashboards, visualization from this data.

Need of ETL

There are many reasons the need for ETL is arising:

- ETL helps the companies to analyze their business data for making critical business decisions.

- Data warehouse provides a shared data repository.

- ETL provides a method of moving data from various sources into a data warehouse.

- As the data sources change, the data warehouse will automatically update.

- Well-designed and documented ETL system is essential for the success of the data warehouse project.

- Transactional databases cannot answer the complex business questions that can be solved by ETL.

- Well designed and documented ETL system is essential to the success of a data warehouse project.

- ETL process allows the sample data comparison between the source and target systems.

- ETL process can perform complex transformation and requires extra area to store the data.

- ETL helps to migrate the data into a data warehouse.

- ETL is a process which is defined earlier for accessing and manipulating source data into a target database.

- For business purpose, ETL offers deep historical context.

- It helps to improve productivity because it is codified and can be reused without a need for technical skills.

ETL working

Data is extracted from one or more sources and then copied to the data warehouse. When we are dealing with a large volume of data and multiple sources systems, data is consolidated. ETL is used to migrate data from one database to another database. ETL is the process which requires loading data to and from data marts and data warehouse. ETL is a process which is also used to transform data from one format to another type.

ETL Process in the data warehouse

We need to load our data warehouse regularly so that it can serve its purpose of facilitating business analysis. The data from one or more operational systems needs to be expected and copied into the data warehouse. The challenge in the data warehouse is to integrate and rearrange the large volume of data over many years. The process of extracting the data from source systems and bringing it into the data warehouse is commonly called ETL. The methodology and tasks of ETL are known for many years. Data has to share between applications or systems trying to integrate them.

ETL is a three steps process:

1. Extraction

In this step, data is extracted from the source system to the ETL server or staging area. Transformation is done in this area so that the performance of the source system is not degraded. If corrupted data is copied directly into the data warehouse from the source system, rollback will be a challenge over there. Staging area allows validation of the extracted data before it moves in the data warehouse.

There is a need to integrate the system in the data warehouse which has different DBMS, hardware, operating systems, and communication protocols. Here is a need for a logical data map before data is extracted and loaded physically. This data map describes all the relationship between the sources and the target data.

There are three methods to extract the data.

- FULL Extraction

- Partial extraction- without update notification

- Partial Extraction-With update notification

Whether we are using any extraction method, this should not affect the performance and response time of the source system. These source systems are live production system.

Validations during the extraction:

- Confirm record with the source data

- The data type should be checked

- It will check whether all the keys are in place or not

- We have to be sure that no spam/unwanted data is loaded

- Remove all kind of fragment and duplicate data.

2. Transformation

Extracted data from source server is raw and not usable in its original form. Therefore the data should be mapped, cleansed, and transformed. Transformation is an important step where the ETL process adds values and change the data, such as the BI reports, can be generated.

In this step, we apply a set of functions on extracted data. Data that does not require any transformation is called direct move or pass-through data.

In this step, we can apply customized operations on data. For example, the first name and the last name in a table are in a different column, it is possible to concatenate them before loading.

Validation during the Transformation:

- Filtering: For loading select only specific columns

- Character set conversion and encoding handling

- Data threshold and validation check

- For example, Age cannot be more than two digits

- The required field should not be left blank.

- Transpose the rows and columns.

- To merge the data use lookup

3. Loading

Loading the data into the data warehouse is the last step of the ETL process. The vast volume of data needs to load into the data warehouse for a concise time. For increasing the performance, loading should be optimized.

If the loading fails, the recover mechanism should be there to restart from the point of the failure without data integrity loss. Admin of data warehouse needs to monitor, resume, and cancel loads as per server performance.

Types of Loading

- Initial Load – Full the entire data warehouse table

- Incremental Load- Apply changes when needed.

- Full Refresh- Erase the content of one or more tables and reloading with new data.

Wrap UP

- ETL is known as Extraction, Load, and Transform.

- ETL provides the method of moving the data from various sources into a data warehouse.

- The first step includes the extraction of data from the source system into the staging area.

- Transformation step includes the extracted data from the source is cleansed and transformed.

- Loading the data into the data warehouse is the last step of the ETL process.