Approaches to AI Learning

An algorithm is a kind of container: it provides a box for storing a method to solve a particular kind of problem. Algorithms process data through a series of well-defined states. The states do not need to be deterministic, but they are defined nonetheless. The goal is to create an output that solves a problem. In some cases, the algorithm receives input that helps define the output, but the focus is always on the output.

Algorithms must express transitions between states using a well-defined and formal language that the computer can understand. In processing data and solving a problem, the algorithm defines, refines, and performs a function. The function is always specific to the type of problem being addressed by the algorithm.
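The following is a minimal sketch of a deterministic finite-state machine. It makes "well-defined states" and "formal transitions" concrete. The task, which checks whether a binary string encodes a number divisible by 3, is a hypothetical illustration. The names `TRANSITIONS` and `divisible_by_three` are also hypothetical and do not come from the text.

```python
# Hypothetical example: a deterministic finite-state machine whose state is the
# remainder (0, 1, or 2) of the binary number read so far, divided by 3.
TRANSITIONS = {
    (0, "0"): 0, (0, "1"): 1,
    (1, "0"): 2, (1, "1"): 0,
    (2, "0"): 1, (2, "1"): 2,
}
START_STATE = 0
ACCEPTING_STATES = {0}  # remainder 0 means "divisible by 3"


def divisible_by_three(bits: str) -> bool:
    state = START_STATE
    for bit in bits:
        # Each step is a lookup in the formal transition table;
        # a symbol other than "0" or "1" raises KeyError.
        state = TRANSITIONS[(state, bit)]
    return state in ACCEPTING_STATES


print(divisible_by_three("110"))  # 6 -> True
print(divisible_by_three("111"))  # 7 -> False
```

The whole behavior lives in the transition table, so the output follows entirely from the states and the formally specified transitions between them.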

Machine learning researchers can be grouped into five tribes, a framing popularized by Pedro Domingos in The Master Algorithm. Each tribe has its own technique and strategy for solving problems, and each produces its own kind of algorithm. The hope is that combining these algorithms will eventually lead to the master algorithm, a single algorithm able to solve any problem. The following discussion provides an overview of the five main algorithmic techniques.

1: Symbolic logic

One of the earliest tribes, the Symbolists, believed that knowledge could be obtained by operating on symbols (signs that stand for a certain meaning or event) and deriving rules from them.

By putting together complex rule systems, you could attain a logical deduction of the result you wanted to know. For this reason, the Symbolists shaped their algorithms to produce rules from data. In symbolic logic, deduction expands the scope of human knowledge, while induction raises the level of human knowledge. Induction usually opens up new areas of exploration, whereas deduction explores those areas.
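The following toy sketch shows the Symbolist idea of producing rules from data. It uses Find-S, a classic textbook concept-learning algorithm, as the rule inducer, chosen here only for illustration. The weather data and the function names `induce_rule` and `deduce` are hypothetical. Induction builds a rule from examples, and deduction then applies that rule to new cases.

```python
from typing import List, Optional, Sequence, Tuple

Example = Tuple[Tuple[str, ...], bool]


def induce_rule(examples: Sequence[Example]) -> Optional[List[str]]:
    """Find-S (induction): generalize the positive examples into one rule.
    "?" means any value is acceptable for that attribute."""
    rule: Optional[List[str]] = None
    for attributes, is_positive in examples:
        if not is_positive:
            continue  # Find-S ignores negative examples
        if rule is None:
            rule = list(attributes)  # start from the first positive example
        else:
            # Generalize every attribute that disagrees with the new example.
            rule = [r if r == a else "?" for r, a in zip(rule, attributes)]
    return rule


def deduce(rule: Optional[List[str]], attributes: Tuple[str, ...]) -> bool:
    """Deduction: apply the induced rule to a new case."""
    if rule is None:
        return False  # no positive examples were seen, so no rule exists
    return all(r == "?" or r == a for r, a in zip(rule, attributes))


# Hypothetical data: (sky, temperature, wind) -> good day for a walk?
training = [
    (("sunny", "warm", "weak"), True),
    (("sunny", "warm", "strong"), True),
    (("rainy", "cold", "strong"), False),
]
rule = induce_rule(training)
print(rule)  # ['sunny', 'warm', '?']
print(deduce(rule, ("sunny", "warm", "weak")))  # True
print(deduce(rule, ("rainy", "warm", "weak")))  # False
```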

2: Connections modeled on the brain’s neurons

The Connectionists are perhaps the most famous of the five tribes. This tribe attempts to reproduce brain functions by using silicon instead of neurons. Essentially, each of the neurons (built as an algorithm that models the real-world counterpart) solves a small piece of the problem, and using multiple neurons in parallel solves the problem as a whole.

Each artificial neuron fires and transmits its partial solution to the next neuron in line. The solution produced by any single neuron is only part of the whole. Information passes from neuron to neuron until the network produces the final output. Training consists of changing the network’s weights (how much each input counts toward the result) and biases until the actual output matches the target output. This approach has proved most effective in human-like tasks such as recognizing objects, understanding written and spoken language, and interacting with humans.
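The following sketch shows the weight-and-bias adjustment with a single artificial neuron, a perceptron, learning the logical AND function. It is a deliberately small toy under stated assumptions. Real connectionist systems chain many neurons in layers and usually train them with backpropagation rather than the perceptron rule used here. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[Tuple[float, ...], int]


def predict(weights: List[float], bias: float, inputs: Sequence[float]) -> int:
    # The neuron "fires" (outputs 1) when its weighted input sum plus bias is positive.
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples: Sequence[Sample], learning_rate: float = 1.0,
                     max_epochs: int = 100) -> Tuple[List[float], float]:
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                # Nudge each weight and the bias toward the target output.
                weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # actual output now matches the target output
            break
    return weights, bias


and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_gate)
print([predict(weights, bias, x) for x, _ in and_gate])  # [0, 0, 0, 1]
```

The perceptron rule is guaranteed to stop making mistakes only when the data is linearly separable, as AND is. A problem such as XOR needs more than one neuron.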

3: Evolutionary algorithms that test variation

The Evolutionaries relied on the principles of evolution to solve problems. In other words, this strategy is based on the survival of the fittest, which removes any solutions that do not match the desired output. A fitness function determines how viable each candidate function is at solving the problem. Using a tree structure, the method looks for the best solution based on the function output. The winner of each level of evolution gets to build the functions for the next level.

The idea is that the next level will get closer to solving the problem but may not solve it completely, which means that another level is needed. This particular tribe relies heavily on recursion and languages that strongly support recursion to solve problems. An interesting output of this strategy has been algorithms that evolve: one generation of algorithms creates the next generation.
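The following sketch shows the survival-of-the-fittest loop as a small genetic algorithm. Note one substitution: it evolves fixed-length bit strings scored by the toy OneMax fitness function (count the 1 bits) instead of the tree-structured programs described above. Every name and parameter value is a hypothetical choice. Because the fitter half of each generation survives unchanged to build the next one, the best fitness can never decrease.

```python
import random
from typing import List

Genome = List[int]


def fitness(genome: Genome) -> int:
    return sum(genome)  # OneMax: more 1 bits means a fitter genome


def mutate(genome: Genome, rate: float, rng: random.Random) -> Genome:
    # Flip each bit independently with probability `rate`.
    return [1 - g if rng.random() < rate else g for g in genome]


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    point = rng.randrange(1, len(a))  # single cut point
    return a[:point] + b[point:]


def evolve(genome_length: int = 20, population_size: int = 30,
           generations: int = 40, mutation_rate: float = 0.05,
           seed: int = 0) -> List[int]:
    """Return the best fitness seen at the start of each generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(population_size)]
    history: List[int] = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        if history[-1] == genome_length:
            break  # a perfect solution has evolved
        survivors = population[: population_size // 2]  # survival of the fittest
        children = []
        while len(survivors) + len(children) < population_size:
            parent_a, parent_b = rng.sample(survivors, 2)
            children.append(mutate(crossover(parent_a, parent_b, rng), mutation_rate, rng))
        population = survivors + children  # the next level
    return history


print(evolve())
```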

4: Bayesian inference

The Bayesians recognized that uncertainty was the dominant aspect to keep in view. Learning was not assured. Instead, it took place as a continuous update of previous beliefs, which became more accurate over time. This notion inspired the Bayesians to adopt statistical methods, in particular derivations from Bayes’ theorem. These methods help you calculate probabilities under specific conditions, for example, the probability of drawing a card of a certain suit from a deck after three other cards of the same suit have already been drawn.
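The card example reduces to simple counting, and Bayes’ theorem, P(H | E) = P(E | H) P(H) / P(E), turns new evidence into updated beliefs. The sketch below assumes a standard 52-card deck with 13 cards per suit, and it assumes that only the three same-suit cards have been drawn. The coin hypotheses are a hypothetical illustration of repeated belief updates.

```python
from fractions import Fraction
from typing import Dict


def same_suit_probability(suit_size: int = 13, deck_size: int = 52,
                          already_drawn: int = 3) -> Fraction:
    """P(next card has the suit | `already_drawn` cards of that suit are gone)."""
    return Fraction(suit_size - already_drawn, deck_size - already_drawn)


def bayes_update(prior: Dict[str, Fraction],
                 likelihood: Dict[str, Fraction]) -> Dict[str, Fraction]:
    """Posterior P(h | evidence) is proportional to P(evidence | h) * P(h)."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())  # P(evidence), the normalizer
    return {h: p / evidence for h, p in unnormalized.items()}


print(same_suit_probability())  # 10/49

# Hypothetical: is a coin fair, or biased toward heads with P(heads) = 3/4?
belief = {"fair": Fraction(1, 2), "biased": Fraction(1, 2)}
heads_likelihood = {"fair": Fraction(1, 2), "biased": Fraction(3, 4)}
for _ in range(3):  # observe three heads, updating the belief after each one
    belief = bayes_update(belief, heads_likelihood)
print(belief)  # {'fair': Fraction(8, 35), 'biased': Fraction(27, 35)}
```

Each posterior becomes the prior for the next observation. This mirrors the idea of learning as a continuous update of earlier beliefs.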

5: Systems that learn by analogy

The Analogizers use kernel machines to recognize patterns in data. By recognizing the pattern of a set of inputs and comparing it to known outputs, you can create a problem solution. The goal is to use similarity to determine the best solution to a problem. This kind of reasoning holds that if a particular solution worked in a particular situation at some earlier time, the same solution should also work in similar situations.
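Similarity-based reasoning can be sketched with a kernel function. The block below implements a kernel-weighted vote, also known as a Parzen-window classifier. It is a simpler relative of the support vector machines usually meant by "kernel machines." The RBF kernel, the `gamma` value, and the labeled points are all hypothetical choices.

```python
import math
from typing import Dict, List, Sequence, Tuple

Example = Tuple[Tuple[float, ...], str]


def rbf_kernel(a: Sequence[float], b: Sequence[float], gamma: float = 1.0) -> float:
    """Similarity in (0, 1]: 1.0 for identical points, near 0 for distant ones."""
    squared_distance = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-gamma * squared_distance)


def classify_by_analogy(query: Sequence[float], examples: List[Example],
                        gamma: float = 1.0) -> str:
    """Each known example votes for its label, weighted by similarity to the query."""
    scores: Dict[str, float] = {}
    for features, label in examples:
        scores[label] = scores.get(label, 0.0) + rbf_kernel(query, features, gamma)
    return max(scores, key=scores.get)


examples: List[Example] = [
    ((1.0, 1.0), "small"), ((1.2, 0.8), "small"),
    ((5.0, 5.0), "large"), ((5.5, 4.5), "large"),
]
print(classify_by_analogy((1.1, 0.9), examples))  # small
print(classify_by_analogy((5.2, 4.8), examples))  # large
```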

One of the most recognizable outputs of this tribe is the recommendation system. For example, when you buy a product on Amazon, the recommendation system comes up with other related products that you might want to buy.
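The following minimal item-to-item recommender works in the same analogical spirit: two products count as similar when the same customers bought them. It is not Amazon’s actual system. The purchase data and function names are hypothetical, and similarity is measured as the cosine of the two items’ buyer sets.

```python
import math
from typing import Dict, List, Set

# Hypothetical purchase histories: customer -> set of products bought.
PURCHASES: Dict[str, Set[str]] = {
    "alice": {"laptop", "mouse", "laptop_bag"},
    "bob": {"laptop", "mouse"},
    "carol": {"laptop", "laptop_bag"},
    "dave": {"novel", "bookmark"},
    "erin": {"laptop", "mouse"},
}


def buyers_by_item(purchases: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Invert the data: product -> set of customers who bought it."""
    index: Dict[str, Set[str]] = {}
    for customer, items in purchases.items():
        for item in items:
            index.setdefault(item, set()).add(customer)
    return index


def recommend(item: str, purchases: Dict[str, Set[str]]) -> List[str]:
    """Rank other items by cosine similarity of their buyer sets."""
    index = buyers_by_item(purchases)
    target = index[item]
    scores: Dict[str, float] = {}
    for other, buyers in index.items():
        if other == item:
            continue
        shared = len(target & buyers)
        if shared:  # skip items no common customer bought
            scores[other] = shared / math.sqrt(len(target) * len(buyers))
    # Highest similarity first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda name: (-scores[name], name))


print(recommend("laptop", PURCHASES))  # ['mouse', 'laptop_bag']
```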

The ultimate goal of this line of machine learning research is to combine the techniques and strategies of the five tribes into a single master algorithm that can learn anything. Achieving that goal is still a long way off, but scientists such as Pedro Domingos are working toward it.

What Are the Different Types of Artificial Intelligence Approaches?

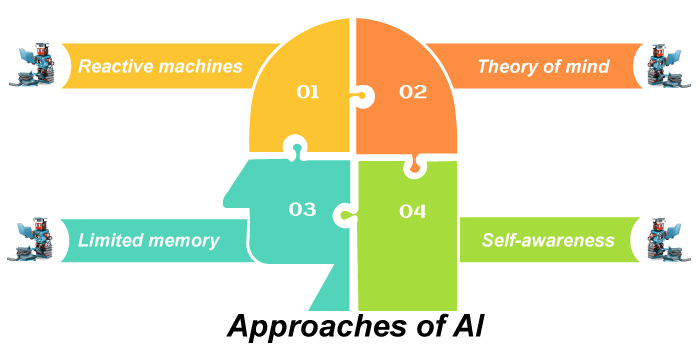

AI may look simple to a non-specialist, but building AI systems involves a great deal of technology. AI approaches can be divided into four types based on how machines behave: reactive machines, limited memory, theory of mind, and self-awareness.

1. Reactive machines

These machines are the most basic form of AI application. A well-known example of a reactive machine is Deep Blue, IBM’s chess-playing supercomputer, which defeated reigning world chess champion Garry Kasparov in 1997.

Reactive machines are not fed training sets, and they do not store data for future reference. The machine decides on its next move based on the move the opponent has just made.
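The following toy agent illustrates a reactive machine. It picks a tic-tac-toe move from the current board alone and keeps no record of earlier moves or games. Deep Blue itself relied on large-scale game-tree search, so the fixed rule list used here is only a hypothetical stand-in: win if possible, otherwise block, otherwise take a preferred cell. The function names are also hypothetical.

```python
from typing import List, Optional, Sequence

# Every row, column, and diagonal of a 3x3 board stored as a flat list of 9 cells.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]
EMPTY = " "


def completing_cell(board: Sequence[str], player: str) -> Optional[int]:
    """Return the empty cell that would give `player` three in a line, if any."""
    for line in LINES:
        cells = [board[i] for i in line]
        if cells.count(player) == 2 and cells.count(EMPTY) == 1:
            return line[cells.index(EMPTY)]
    return None


def reactive_move(board: Sequence[str], me: str = "X", opponent: str = "O") -> int:
    """Pick a move from the current board only; nothing is remembered."""
    winning = completing_cell(board, me)
    if winning is not None:
        return winning
    blocking = completing_cell(board, opponent)
    if blocking is not None:
        return blocking
    for cell in (4, 0, 2, 6, 8, 1, 3, 5, 7):  # center, corners, then edges
        if board[cell] == EMPTY:
            return cell
    raise ValueError("board is full")


board: List[str] = ["O", "O", EMPTY,
                    EMPTY, "X", EMPTY,
                    EMPTY, EMPTY, EMPTY]
print(reactive_move(board))  # 2 (blocks O's top row)
```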

2. Limited memory

These machines make up the second category (Type II) of AI applications, and self-driving cars are the perfect example. Over time, these machines are fed data and trained on the speed and direction of other cars, lane markings, traffic lights, road curves, and other important factors.
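One common reading of "limited memory" is that the system keeps a short, rolling window of recent observations and discards older ones. The sketch below assumes that reading. It tracks another car’s position readings and estimates the car’s speed from only the last few readings. The class name, window size, and readings are hypothetical.

```python
from collections import deque
from typing import Deque, Optional, Tuple


class LimitedMemoryTracker:
    """Remembers only the most recent observations of another car."""

    def __init__(self, window: int = 3) -> None:
        # deque(maxlen=...) silently discards the oldest entry when it is full.
        self.observations: Deque[Tuple[float, float]] = deque(maxlen=window)

    def observe(self, time_s: float, position_m: float) -> None:
        self.observations.append((time_s, position_m))

    def estimated_speed(self) -> Optional[float]:
        """Average speed (m/s) across the remembered window, if computable."""
        if len(self.observations) < 2:
            return None
        (t_old, p_old), (t_new, p_new) = self.observations[0], self.observations[-1]
        return (p_new - p_old) / (t_new - t_old)


tracker = LimitedMemoryTracker(window=3)
for t, position in [(0, 0.0), (1, 10.0), (2, 20.0), (3, 35.0)]:
    tracker.observe(t, position)
print(tracker.estimated_speed())  # 12.5 (the reading at t=0 was forgotten)
```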

3. Theory of mind

Theory of mind is the concept of bots that understand and react to human emotions and thoughts. Researchers are still struggling to make this concept work, and we are not there yet.

If AI-powered machines are to live and move around among us, they must understand human behavior and react to it appropriately.

4. Self-awareness

Self-aware machines would be an extension of Type III (theory of mind) AI, going a step beyond understanding human emotions. This is the stage at which AI teams would build machines with self-awareness programmed into them.

For example, when someone honks the horn from behind, such a machine would have to sense the other driver’s emotion. Only then could it understand how it feels to be on the receiving end when it honks at someone else.