Architecture of Neural Networks

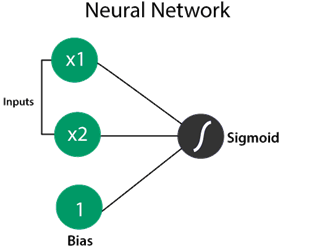

We obtained a non-linear model by combining two linear models using weights, a bias, and the sigmoid function. Let us now illustrate this more clearly and understand the architecture of a neural network and of a deep neural network.

Consider the following example.

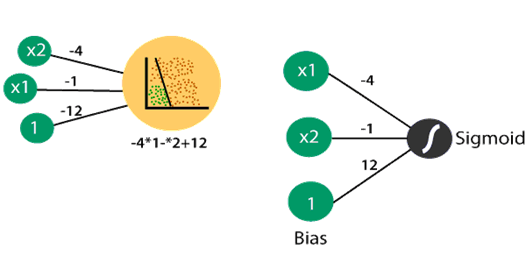

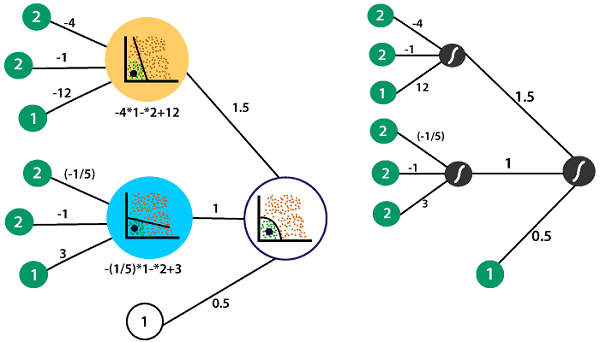

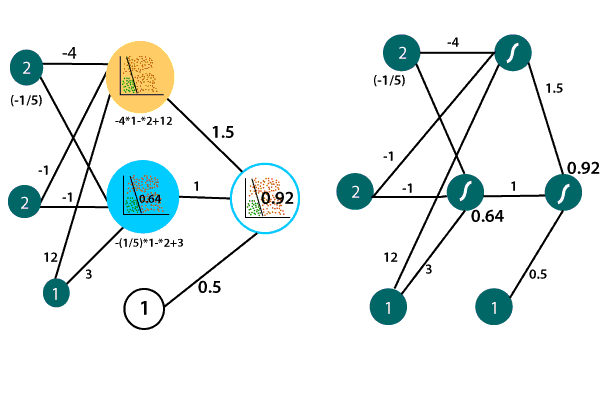

Suppose there is a linear model whose line is represented as -4x1 - x2 + 12. We can represent it with the following perceptron.

The weights -4 and -1 and the bias 12 in the input layer represent the equation of the linear model, into which the input is passed to obtain its probability of being in the positive region. Take one more model whose line is represented as-

Now we combine these two perceptrons to obtain a non-linear perceptron, or model, by multiplying the output of each model by a weight and adding a bias. After that, we apply the sigmoid to obtain the curve as follows:

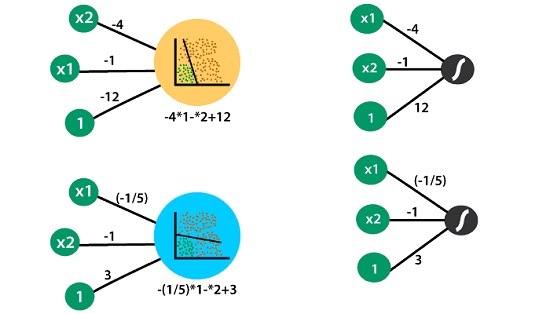

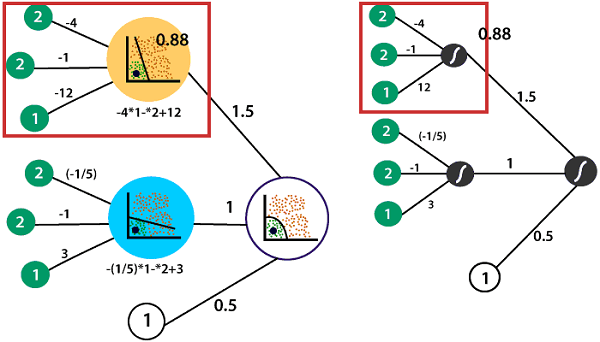

In our previous example, suppose we have two inputs, x1 and x2. These inputs represent a single point at coordinates (2, 2), and we want to obtain the probability of that point being in the positive region of the non-linear model. The coordinates (2, 2) are passed into the first layer, which consists of two linear models.

The first linear model processes the two inputs to obtain the probability of the point being in the positive region: it takes a linear combination of the inputs using the model's weights and bias, then applies the sigmoid, which gives a probability of 0.88.
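The calculation for the first model can be checked with a few lines of Python. This is a minimal sketch, not code from the original example: the helper names `sigmoid` and `perceptron` are illustrative assumptions, while the weights (-4, -1), the bias 12, and the point (2, 2) are taken from the text.

```python
import math

def sigmoid(score):
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-score))

def perceptron(inputs, weights, bias):
    """Linear combination of the inputs followed by a sigmoid."""
    score = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(score)

# First linear model from the text: -4*x1 - x2 + 12
point = (2, 2)
p_first = perceptron(point, weights=(-4, -1), bias=12)
print(round(p_first, 2))  # 0.88
```

The score here is -4(2) - 2 + 12 = 2, and the sigmoid of 2 is roughly 0.88, which matches the value quoted above.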

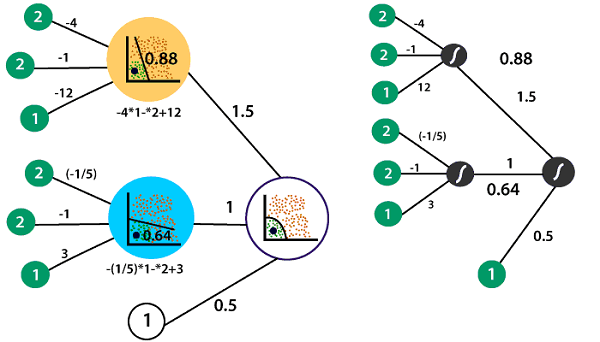

In the same way, we find the probability of the point being in the positive region of the second model, which is 0.64.

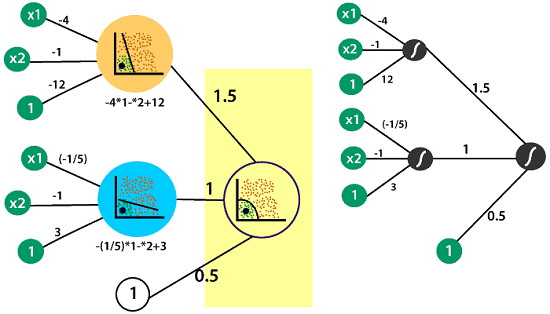

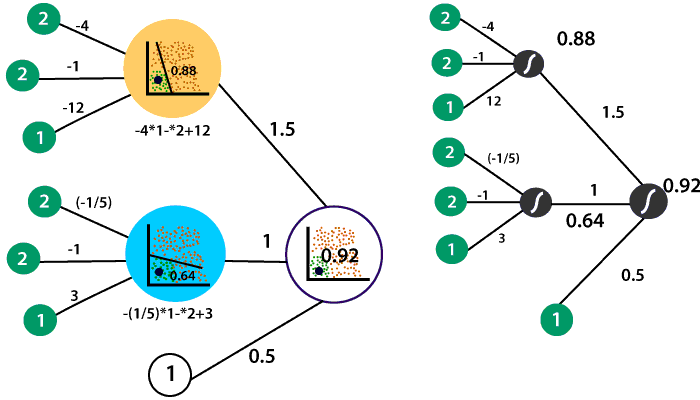

To combine both models, we take a linear combination of their probabilities with weights 1.5 and 1 and a bias of 0.5. We multiply the output of the first model by the first weight and the output of the second model by the second weight, then add everything together with the bias to obtain the score. Taking the sigmoid of this linear combination of the two models yields a new model. Doing the same for our point gives a score of 1.5(0.88) + 1(0.64) + 0.5 = 2.46, which the sigmoid converts to a probability of 0.92 of the point being in the positive region of the non-linear model.
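The combination step can be reproduced in the same way. The sketch below starts from the two probabilities reported in the text (0.88 and 0.64) rather than recomputing them, since the equation of the second model is not given here; the variable names are illustrative assumptions.

```python
import math

def sigmoid(score):
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-score))

# Probabilities reported in the text for the point (2, 2)
p_first, p_second = 0.88, 0.64

# Weights and bias of the combining (output) perceptron, from the text
w_first, w_second, bias = 1.5, 1.0, 0.5

# Weighted sum of the two model outputs plus the bias
score = w_first * p_first + w_second * p_second + bias
p_combined = sigmoid(score)
print(round(score, 2), round(p_combined, 2))  # 2.46 0.92
```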

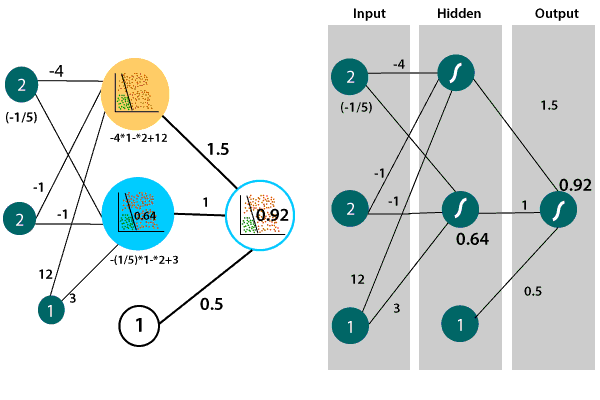

This is the feed-forward process of a neural network. To make the notation more compact, we can rearrange the diagram of this neural network: instead of drawing a separate pair of x1 and x2 input nodes for each linear model, we represent our point with a single pair of x1 and x2 nodes that feeds both models.

This illustrates the architecture of a neural network. There is an input layer, which contains the inputs; a second layer, which is a set of linear models; and an output layer, which combines our two linear models to obtain a non-linear model.

Deep Neural Network

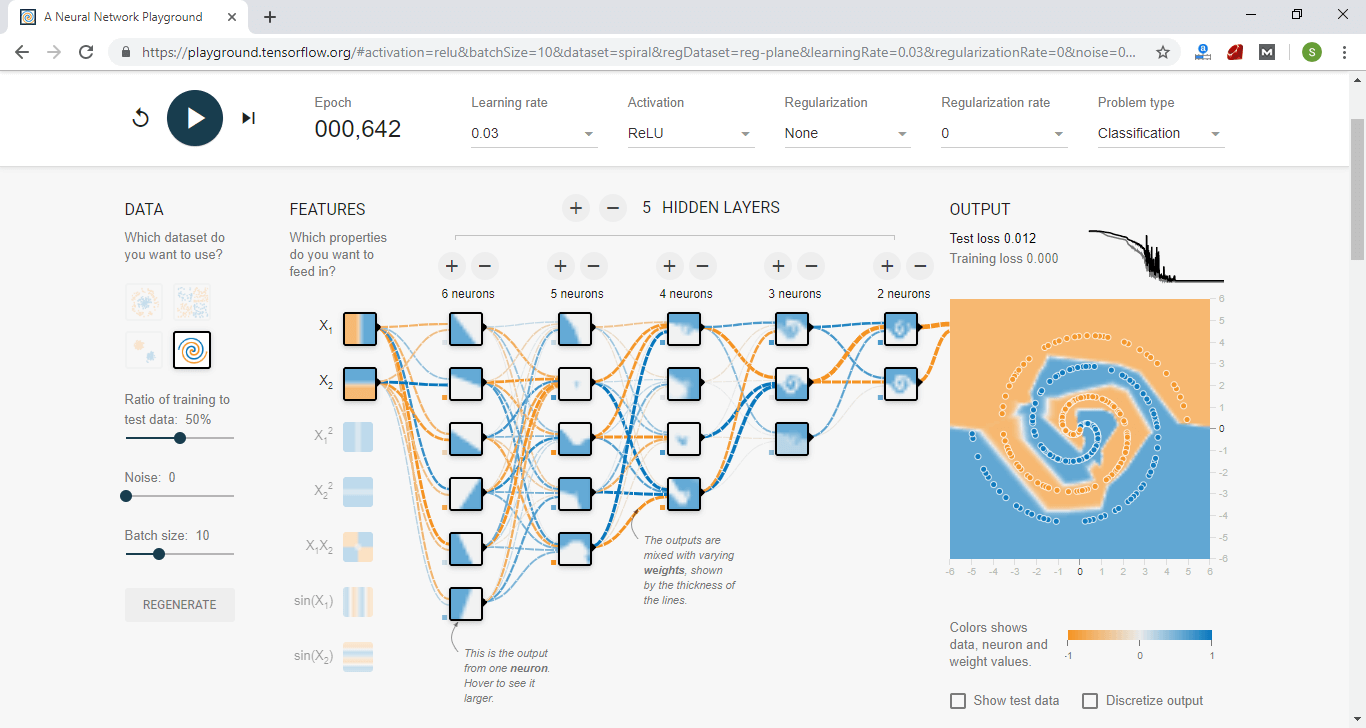

We use the models in the hidden layers and combine them to create non-linear models that best classify our data. Sometimes the data is too complex for this, and to classify it we have to combine non-linear models to create an even more non-linear model.

We can do this many times, with even more hidden layers, and obtain highly complex models.

Classifying this type of data is more difficult. It requires many hidden layers of models, combined into one another with some set of weights, to obtain a model that classifies the data accurately.

After that, we can produce an output through a feed-forward operation: the input has to pass through the entire depth of the neural network before an output is produced. This is just a multilayer perceptron. In a deep neural network, the trend in our data is not straightforward, so only such a non-linear boundary is an accurate model that correctly classifies a very complex set of data.
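The feed-forward operation described above can be sketched as a loop over layers, where the output of each layer becomes the input of the next. This is only an illustration under stated assumptions: the function `feed_forward`, the layer representation (a list of weight and bias pairs per node), and every weight in the 2-3-2-1 network below are hypothetical and chosen arbitrarily, not taken from the figures.

```python
import math

def sigmoid(score):
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-score))

def feed_forward(layers, inputs):
    """Pass the inputs through every layer in order.

    Each layer is a list of (weights, bias) pairs, one pair per node.
    """
    activations = list(inputs)
    for layer in layers:
        # Every node combines all activations of the previous layer.
        activations = [
            sigmoid(sum(w * a for w, a in zip(weights, activations)) + bias)
            for weights, bias in layer
        ]
    return activations

# Hypothetical network with made-up weights, for illustration only.
layers = [
    [((-4.0, -1.0), 12.0), ((1.0, -2.0), 0.5), ((0.5, 0.5), -1.0)],  # hidden layer 1
    [((1.5, 1.0, -0.5), 0.5), ((-1.0, 2.0, 1.0), 0.0)],              # hidden layer 2
    [((1.0, 1.0), -1.0)],                                            # output layer
]
output = feed_forward(layers, (2, 2))
print(output)  # a list holding one probability between 0 and 1
```

Adding depth only means appending more entries to `layers`; the loop itself does not change, which is why a deep network can be described as a multilayer perceptron.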

Many hidden layers are required to obtain this non-linear boundary. Each layer contains models that are combined into one another to produce the very complex boundary that classifies our data.

Deep neural networks can be trained to represent more complex functions and so classify even more complex data.