What is Boosting in Data Mining?

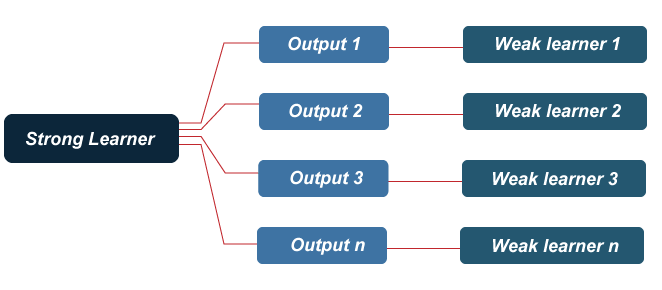

Boosting is an ensemble learning method that combines a set of weak learners into a strong learner to minimize training errors. In boosting, a model is fitted to a random sample of the data, and further models are then trained sequentially, so that each model tries to compensate for the weaknesses of its predecessor. With each iteration, the weak rules from the individual classifiers are combined to form one strong prediction rule.

Boosting is an efficient way to convert weak learners into a strong learner. The conversion works by combining the weak learners’ predictions, using either a weighted average or the prediction with the most votes. These algorithms typically use decision stumps and margin-maximizing classification.

Three common boosting algorithms are AdaBoost (Adaptive Boosting), Gradient Boosting, and XGBoost. These machine learning algorithms follow a process of training, predicting, and fine-tuning the result.

Example

Let’s understand this concept with an example. Take email: how do you decide whether a message is spam or not? You might use the following rules:

- If an email contains lots of sources, that means it is spam.

- If an email contains only a single image file, then it is spam.

- If an email contains the message “You won a lottery of $xxxxx,” it is spam.

- If an email contains some known source, then it is not spam.

- If it contains the official domain like educba.com, etc., it is not spam.

None of the rules above is powerful enough on its own to decide whether an email is spam; hence these rules are called weak learners.

To convert the weak learners into a strong learner, combine their predictions using one of the following methods:

- Take the average or weighted average of the predictions.

- Take the prediction that receives the most votes.

Consider the 5 rules mentioned above and suppose that, for a given email, 3 of them vote spam and 2 vote not spam. Since spam has the most votes, we classify the email as spam.
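The two combination methods can be sketched in a few lines of Python. This is only an illustration: the vote list and the weights are made-up values, not learned from data, and the function names are hypothetical.

```python
from collections import Counter

def majority_vote(votes):
    """Return the label predicted by the largest number of weak rules."""
    return Counter(votes).most_common(1)[0][0]

def weighted_vote(votes, weights):
    """Return the label with the largest total weight behind it."""
    totals = {}
    for label, weight in zip(votes, weights):
        totals[label] = totals.get(label, 0.0) + weight
    return max(totals, key=totals.get)

# Hypothetical outputs of the five rules for one email.
votes = ["spam", "spam", "spam", "not spam", "not spam"]
print(majority_vote(votes))  # spam (3 votes against 2)

# Hypothetical weights: the two "not spam" rules are trusted more.
weights = [0.1, 0.1, 0.1, 0.4, 0.3]
print(weighted_vote(votes, weights))  # not spam (0.7 against 0.3)
```

Note that the two methods can disagree: a weighted vote lets a few reliable rules outweigh a larger number of unreliable ones.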

Why is Boosting Used?

To solve complicated problems, we require more advanced techniques. Suppose that, given a data set of images containing images of cats and dogs, you were asked to build a model that can classify these images into two separate classes. Like every other person, you will start by identifying the images by using some rules given below:

- The image has pointy ears: Cat

- The image has cat-shaped eyes: Cat

- The image has bigger limbs: Dog

- The image has sharpened claws: Cat

- The image has a wider mouth structure: Dog

These rules help us identify whether an image shows a dog or a cat. However, the prediction would be flawed if we were to classify an image based on a single rule. These rules are called weak learners because no one of them is strong enough to classify an image as a cat or a dog.

Therefore, to ensure our prediction is more accurate, we can combine the prediction from these weak learners by using the majority rule or weighted average. This makes a strong learner model.

In the above example, we have defined 5 weak learners, and the majority of these rules (i.e., 3 out of 5 learners predict the image as a cat) give us the prediction that the image is a cat. Therefore, our final output is a cat.

How does the Boosting Algorithm Work?

The basic principle behind the boosting algorithm is to generate multiple weak learners and combine their predictions to form one strong rule. These weak rules are generated by applying base machine learning algorithms to different distributions of the data set, producing a new weak rule in each iteration. After multiple iterations, the weak learners are combined to form a strong learner that predicts a more accurate outcome.

Here’s how the algorithm works:

Step 1: The base algorithm reads the data and assigns equal weight to each sample observation.

Step 2: The false predictions made by the base learner are identified. In the next iteration, the next base learner is trained with a higher weight on these incorrectly predicted observations.

Step 3: Repeat step 2 until the algorithm classifies the output correctly or a preset number of iterations is reached.

Therefore, the main aim of boosting is to focus more on misclassified predictions.
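Steps 1 and 2 can be sketched as a single reweighting round. The update rule below is the one used by AdaBoost, chosen here as an assumption since the steps above do not fix a formula; the labels and the weak learner's predictions are hypothetical.

```python
import math

def reweight(weights, y_true, y_pred):
    """One AdaBoost-style round: raise the weights of misclassified samples."""
    # Weighted error of the current weak learner.
    error = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    error = min(max(error, 1e-10), 1 - 1e-10)  # guard against log(0)
    # The learner's say in the final vote; larger when the error is smaller.
    alpha = 0.5 * math.log((1 - error) / error)
    # Misclassified samples are scaled up, correct ones are scaled down.
    new = [w * math.exp(alpha if t != p else -alpha)
           for w, t, p in zip(weights, y_true, y_pred)]
    total = sum(new)
    return [w / total for w in new], alpha

y_true = [1, 1, -1, -1, 1]
y_pred = [1, 1, -1, -1, -1]                 # hypothetical output; last sample is wrong
weights = [1 / len(y_true)] * len(y_true)   # Step 1: equal weights
weights, alpha = reweight(weights, y_true, y_pred)  # Step 2: reweight
print([round(w, 3) for w in weights])  # [0.125, 0.125, 0.125, 0.125, 0.5]
```

After one round the single misclassified sample carries half of the total weight, so the next weak learner is pushed to get it right.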

Types of Boosting

Boosting methods focus on iteratively combining weak learners to build a strong learner that can predict more accurate outcomes. As a reminder, a weak learner classifies data only slightly better than random guessing. This approach can give robust results on prediction problems and, on some tasks, can outperform neural networks and support vector machines.

Boosting algorithms can differ in how they create and aggregate weak learners during the sequential process. Three popular types of boosting methods include:

1. Adaptive boosting or AdaBoost: This method operates iteratively, identifying misclassified data points and adjusting their weights to minimize the training error. The model continues to optimize sequentially until it yields the strongest predictor.

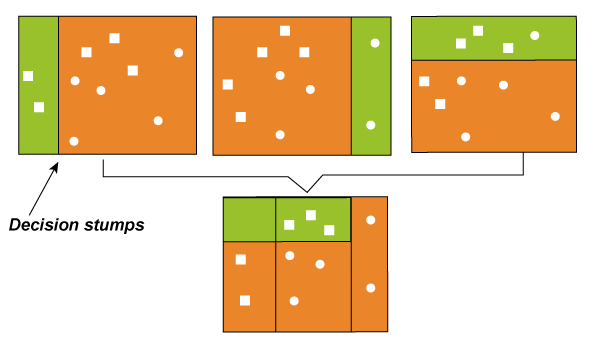

AdaBoost is implemented by combining several weak learners into a single strong learner. Each weak learner in AdaBoost considers a single input feature and builds a single-split decision tree called a decision stump. Every observation is weighted equally when the first decision stump is built.

The results from the first decision stump are analyzed, and any observations that were wrongly classified are assigned higher weights. A new decision stump is then built that treats the higher-weight observations as more significant. Again, any misclassified observations are given a higher weight, and this process continues until all the observations fall into the right class.

AdaBoost can be used for both classification and regression-based problems. However, it is more commonly used for classification purposes.
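The stump-based procedure can be sketched end to end in plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: it handles one numeric feature and labels in {+1, -1}, the function names are hypothetical, and the toy data set is invented so that no single stump can classify it correctly.

```python
import math

def stump_predict(x, threshold, polarity):
    """A decision stump: one split on a single feature."""
    return polarity if x <= threshold else -polarity

def fit_stump(xs, ys, weights):
    """Pick the threshold and polarity with the lowest weighted error."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            error = sum(w for w, x, y in zip(weights, xs, ys)
                        if stump_predict(x, threshold, polarity) != y)
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best

def adaboost(xs, ys, rounds):
    n = len(xs)
    weights = [1.0 / n] * n          # every observation starts with equal weight
    ensemble = []
    for _ in range(rounds):
        error, threshold, polarity = fit_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)   # guard against log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # this stump's vote weight
        ensemble.append((alpha, threshold, polarity))
        # Raise the weights of misclassified observations, lower the rest.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def adaboost_predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(a * stump_predict(x, t, p) for a, t, p in ensemble)
    return 1 if score >= 0 else -1

# Toy data (invented): no single threshold separates the two classes.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
ensemble = adaboost(xs, ys, rounds=3)
print([adaboost_predict(ensemble, x) for x in xs] == ys)  # True
```

On this toy set the best single stump misclassifies three of the ten points, while the weighted vote of three stumps classifies all of them correctly.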

2. Gradient Boosting: Gradient Boosting is also based on sequential ensemble learning. Here the base learners are generated sequentially so that each new base learner improves on the previous ones, i.e., the overall model improves with each iteration.

The difference in this boosting type is that the weights of misclassified outcomes are not incremented. Instead, the Gradient Boosting method tries to optimize the loss function of the previous learner by adding a new weak learner that reduces the loss.

The main idea here is to overcome the errors in the previous learner’s predictions. This boosting has three main components:

- Loss function: The choice of loss function depends on the type of problem. An advantage of gradient boosting is that a new boosting algorithm does not have to be derived for each loss function; any differentiable loss can be used.

- Weak learner: In gradient boosting, decision trees are used as the weak learners. Specifically, regression trees are used because they output real values, which can be added together to correct the predictions. As in AdaBoost, very small trees with a single split (decision stumps) can be used; larger trees, typically with 4 to 8 levels, are also common.

- Additive Model: Trees are added one at a time in this model. Existing trees remain the same. During the addition of trees, gradient descent is used to minimize the loss function.

Like AdaBoost, Gradient Boosting can also be used for classification and regression problems.
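The additive model can be illustrated with a small regression sketch. It assumes squared-error loss, for which the negative gradient is simply the residual, and uses one-split regression stumps on a single feature; the function names, the learning rate, and the toy data are all hypothetical choices made for this example.

```python
def fit_regression_stump(xs, residuals):
    """Fit a one-split regression tree to the residuals by least squares."""
    best = None
    for threshold in sorted(set(xs))[:-1]:   # keep both sides non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]

def gradient_boost(xs, ys, n_trees=50, learning_rate=0.1):
    base = sum(ys) / len(ys)                 # start from a constant prediction
    preds = [base] * len(xs)
    trees = []
    for _ in range(n_trees):
        # For squared error, the negative gradient is the residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        threshold, left_mean, right_mean = fit_regression_stump(xs, residuals)
        trees.append((threshold, left_mean, right_mean))   # earlier trees stay fixed
        preds = [p + learning_rate * (left_mean if x <= threshold else right_mean)
                 for x, p in zip(xs, preds)]
    return base, learning_rate, trees

def gb_predict(model, x):
    base, learning_rate, trees = model
    return base + sum(learning_rate * (lm if x <= t else rm) for t, lm, rm in trees)

def mse(model, xs, ys):
    return sum((y - gb_predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Toy data (invented): a roughly linear trend with a little noise.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.2, 1.9, 3.1, 3.9, 5.2, 6.1, 6.8, 8.1]
baseline = gradient_boost(xs, ys, n_trees=0)   # constant model only
model = gradient_boost(xs, ys, n_trees=50)
print(mse(baseline, xs, ys), mse(model, xs, ys))
```

Each new stump is fitted to what the current ensemble still gets wrong, and the learning rate shrinks its contribution so that no single tree dominates the sum.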

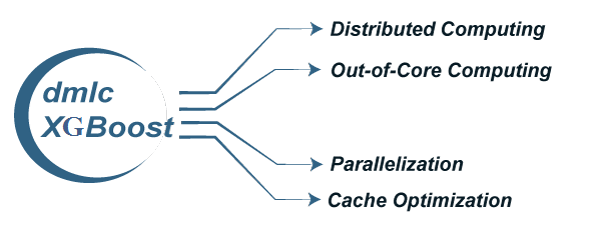

3. Extreme gradient boosting or XGBoost: XGBoost is an advanced gradient boosting method. It was developed by Tianqi Chen and is a project of the Distributed Machine Learning Community (DMLC).

The main aim of this algorithm is to increase the speed and efficiency of computation. Standard gradient boosting implementations compute the output more slowly because they analyze the data set sequentially. XGBoost is therefore used to boost, or extremely boost, the model’s performance.

XGBoost is designed to focus on computational speed and model efficiency. The main features provided by XGBoost are:

- Parallel Processing: XGBoost provides parallel processing for tree construction, which uses multiple CPU cores while training.

- Cross-Validation: XGBoost enables users to run cross-validation of the boosting process at each iteration, making it easy to get the exact optimum number of boosting iterations in one run.

- Cache Optimization: It provides cache optimization of the algorithms for higher execution speed.

- Distributed Computing: For training large models, XGBoost allows distributed computing.

Benefits and Challenges of Boosting

The boosting method presents many advantages and challenges for classification or regression problems. The benefits of boosting include:

- Ease of Implementation: Boosting can be used with several hyper-parameter tuning options to improve fitting. Little data preprocessing is required, and some implementations, such as XGBoost, have built-in routines to handle missing data. In Python, the ensemble module of the scikit-learn library makes it easy to implement popular boosting methods such as AdaBoost and gradient boosting, and XGBoost is available as a separate library with a scikit-learn-compatible interface.

- Reduction of bias: Boosting algorithms combine multiple weak learners in a sequential method, iteratively improving upon observations. This approach can help to reduce high bias, commonly seen in shallow decision trees and logistic regression models.

- Computational Efficiency: Since boosting algorithms select only the features that increase their predictive power during training, they can help reduce dimensionality and increase computational efficiency.

And the challenges of boosting include:

- Overfitting: There’s some dispute in the research around whether or not boosting can help reduce overfitting or make it worse. We include it under challenges because in the instances that it does occur, predictions cannot be generalized to new datasets.

- Intense computation: Sequential training in boosting is hard to scale up. Since each estimator is built on its predecessors, boosting models can be computationally expensive, although XGBoost seeks to address scalability issues in other boosting methods. Boosting algorithms can be slower to train when compared to bagging, as a large number of parameters can also influence the model’s behavior.

- Vulnerability to outlier data: Boosting models are vulnerable to outliers or data values that are different from the rest of the dataset. Because each model attempts to correct the faults of its predecessor, outliers can skew results significantly.

- Real-time implementation: You might find it challenging to use boosting for real-time implementation because the algorithm is more complex than other processes. Boosting methods have high adaptability, so you can use various model parameters that immediately affect the model’s performance.

Applications of Boosting

Boosting algorithms are well suited for artificial intelligence projects across a broad range of industries, including:

- Healthcare: Boosting is used to lower errors in medical data predictions, such as predicting cardiovascular risk factors and cancer patient survival rates. For example, research shows that ensemble methods significantly improve the accuracy in identifying patients who could benefit from preventive treatment of cardiovascular disease while avoiding unnecessary treatment of others. Likewise, another study found that applying boosting to multiple genomics platforms can improve the prediction of cancer survival time.

- IT: Gradient boosted regression trees are used in search engines for page rankings, while the Viola-Jones boosting algorithm is used for image retrieval. As noted by Cornell, boosted classifiers allow the computations to be stopped sooner when it’s clear which direction a prediction is headed. A search engine can stop evaluating lower-ranked pages, while image scanners will only consider images containing the desired object.

- Finance: Boosting is used with deep learning models to automate critical tasks, including fraud detection, pricing analysis, and more. For example, boosting methods in credit card fraud detection and financial product pricing analysis improve the accuracy of analyzing massive data sets to minimize financial losses.