Kibana Loading Sample data

In this section, we are going to learn how to load sample data into Kibana. Along with this, we will learn how to load data into Elasticsearch with Logstash and how to use the Kibana Dev Tools to insert bulk data.

We’ve already seen how to upload data to Elasticsearch from Logstash. Here, we will upload data with the help of Logstash and Elasticsearch and then use it in our Kibana interface. Along the way, we will see how to handle data that has date, longitude, and latitude fields. Finally, for cases where we don't have a CSV file, we will see how to upload data directly from Kibana.

Using Logstash to Upload Field Data to Elasticsearch for Kibana

The data that we are going to use is in CSV format. It comes from the popular data repository website Kaggle.com, where many researchers and analysts obtain data for research purposes.

The home medical visits data used here was picked up from the Kaggle.com website.

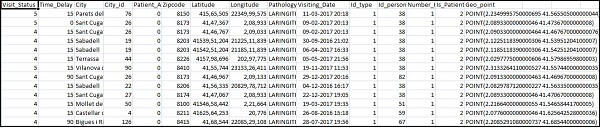

The fields available in the CSV file can be seen in Home visits.csv, which looks like this:

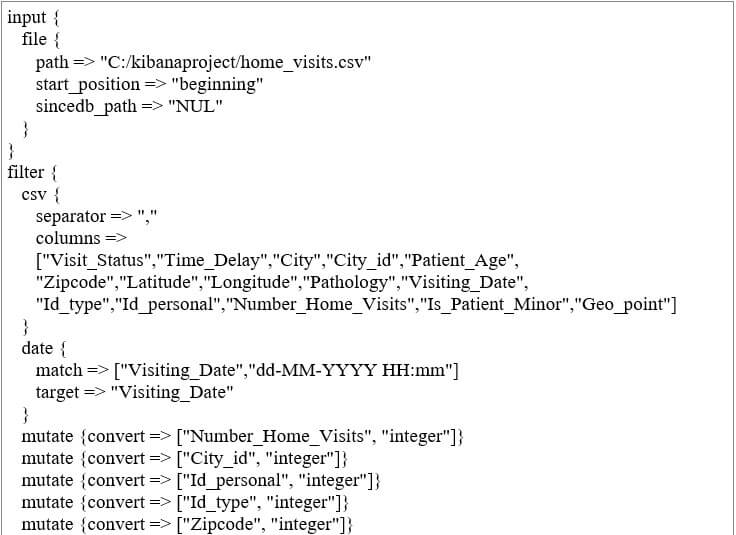

The following is the Logstash conf file to be used:

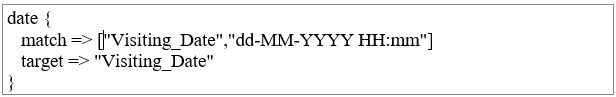

By default, Logstash treats every field it reads from the CSV file as a string, and that is how the fields reach Elasticsearch. If the date field in the CSV file is not in the format we need, we can use the following code to convert it to the desired date format.

For the date field:

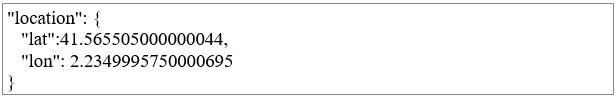
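The date conversion itself is done by Logstash in the conf file above. To make the idea concrete, here is a minimal Python sketch of the same step outside Logstash: parse the date string with a known pattern and emit ISO 8601, which Elasticsearch's default date format accepts. The input pattern `%d-%m-%Y %H:%M` is a hypothetical example; the real layout of the date column in Home visits.csv is only visible in the screenshot.

```python
from datetime import datetime

# Hypothetical input pattern: replace it with the real layout of the CSV date column.
CSV_DATE_FORMAT = "%d-%m-%Y %H:%M"


def to_iso_date(raw: str, fmt: str = CSV_DATE_FORMAT) -> str:
    """Parse a date string from the CSV and return it in ISO 8601 form
    (for example 2019-12-25T14:30:00), which Elasticsearch maps as a date."""
    parsed = datetime.strptime(raw.strip(), fmt)
    return parsed.isoformat()


print(to_iso_date("25-12-2019 14:30"))  # 2019-12-25T14:30:00
```

Emitting ISO 8601 means no custom date format has to be declared on the Elasticsearch side.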

For geolocation, Elasticsearch expects the location in the following form:

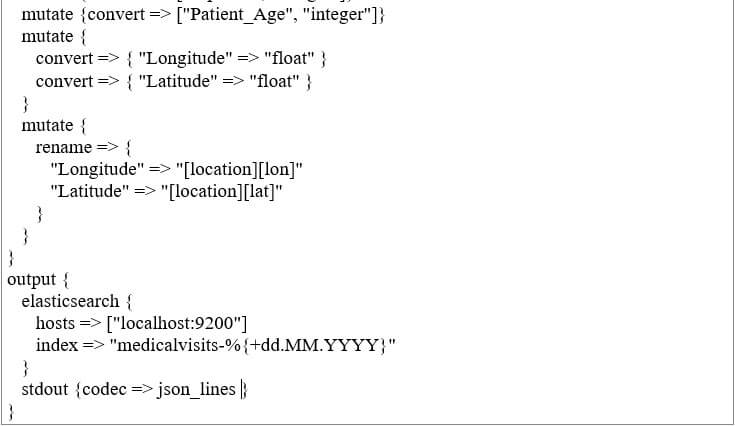

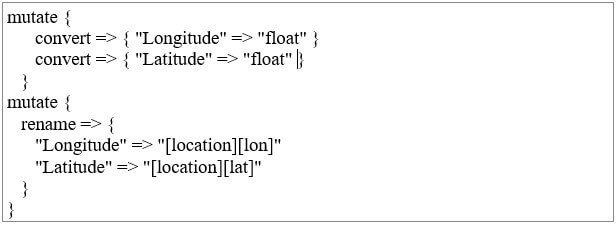

We need to make sure that the longitude and latitude reach Elasticsearch in this same format. So, first, we need to convert the longitude and latitude to the float data type.

Next, we rename them so that they become the lat and lon members of a single location JSON object. See the code below for reference:

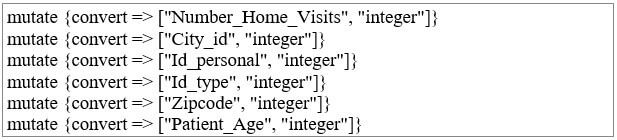
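The two steps, converting to float and then renaming into a lat/lon object, can be sketched in Python as follows. The column names `Latitude` and `Longitude` and the target field `location` are hypothetical placeholders for whatever the real CSV and conf file use. Note also that Elasticsearch only treats such an object as a geo_point if the index mapping declares the field as `geo_point`; with dynamic mapping alone, `lat` and `lon` are indexed as plain numbers.

```python
def to_geo_point(record: dict, lat_field: str = "Latitude",
                 lon_field: str = "Longitude", target: str = "location") -> dict:
    """Return a copy of a CSV record whose latitude/longitude strings are
    converted to floats and moved into a {"lat": ..., "lon": ...} object."""
    doc = dict(record)  # leave the caller's record unchanged
    lat = float(doc.pop(lat_field))  # step 1: string -> float
    lon = float(doc.pop(lon_field))
    doc[target] = {"lat": lat, "lon": lon}  # step 2: rename into one object
    return doc


row = {"Patient": "A1", "Latitude": "28.61", "Longitude": "77.20"}
print(to_geo_point(row))
# {'Patient': 'A1', 'location': {'lat': 28.61, 'lon': 77.2}}
```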

Fields that hold whole numbers can be converted to integers in the same way, using integer as the target type of the conversion.
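A minimal Python sketch of the same integer conversion, applied to a single parsed CSV row. The column names `Visits` and `Age` are hypothetical; list whichever numeric columns the real file has.

```python
from typing import Iterable


def convert_to_int(record: dict, fields: Iterable[str]) -> dict:
    """Return a copy of `record` with the named fields converted from str to int."""
    doc = dict(record)
    for name in fields:
        if name in doc:  # skip columns this row does not have
            doc[name] = int(doc[name])  # int() also ignores surrounding spaces
    return doc


row = {"Visits": "3", "Age": " 71 ", "Name": "x"}
print(convert_to_int(row, ["Visits", "Age"]))
# {'Visits': 3, 'Age': 71, 'Name': 'x'}
```

Values such as `"3.0"` would raise a `ValueError` here, so decimal columns should be converted to float instead.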

After you have taken care of the fields, upload the data to Elasticsearch: go to the Logstash bin directory and run the command below.

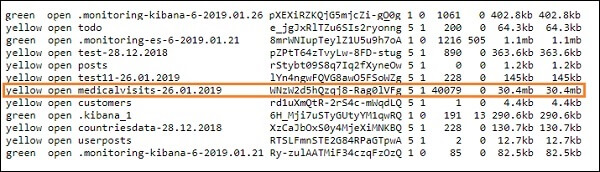

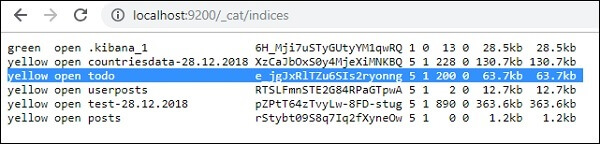

When the upload is done, the index named in the Logstash conf file is visible in Elasticsearch.

Now we can create an index pattern on the uploaded index and use it to build visualizations.

Using Dev Tools to Upload Bulk Data

Next, we will use Dev Tools in the Kibana UI. Dev Tools is very helpful for uploading data into Elasticsearch without using Logstash. From its console, we can send POST, PUT, and DELETE requests and also search the data.

Let’s take the JSON data from the URL given below and upload it through Kibana. You can try loading other sample JSON data in the same way.

The JSON data to be uploaded from Kibana has to be in the indexed (bulk) format that Elasticsearch expects.

Note that each record in the JSON file is preceded by an additional line of data, as follows:

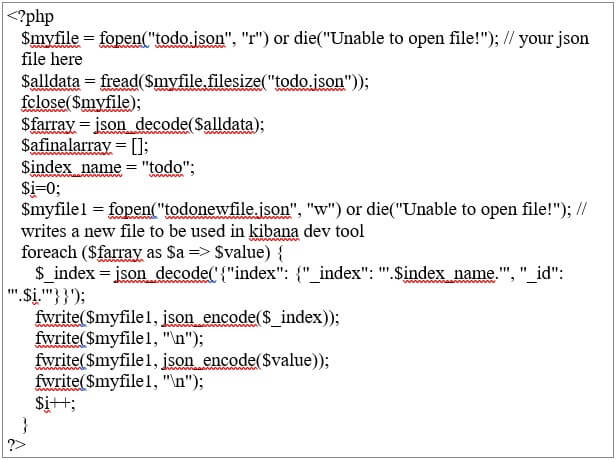
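In the bulk format, every document is preceded by an action line such as `{"index": {"_index": "todo", "_id": 1}}`, each JSON object sits on its own line, and the whole body must end with a newline. The Python sketch below checks a body against those rules and counts the documents; it assumes only `index` actions are used (other actions, such as `delete`, have no document line and would need different handling).

```python
import json


def count_bulk_documents(body: str) -> int:
    """Check that a bulk body alternates index-action lines and document
    lines, and return the number of documents it contains."""
    if not body.endswith("\n"):
        raise ValueError("bulk body must end with a newline")
    lines = body.splitlines()
    if len(lines) % 2 != 0:
        raise ValueError("every action line needs a document line")
    for action_line, doc_line in zip(lines[0::2], lines[1::2]):
        action = json.loads(action_line)
        if list(action) != ["index"]:
            raise ValueError(f"expected an index action, got {action_line!r}")
        json.loads(doc_line)  # the document must be valid JSON on one line
    return len(lines) // 2


sample = '{"index": {"_index": "todo", "_id": 1}}\n{"id": 1, "title": "example task"}\n'
print(count_bulk_documents(sample))  # 1
```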

To convert a sample JSON file into this Elasticsearch-compatible format, we have a small piece of PHP code that outputs the JSON in the format Elasticsearch requires.

PHP Code

We took the todo JSON file from https://jsonplaceholder.typicode.com/todos and used the PHP code to convert it into the format we need for uploading through Kibana.

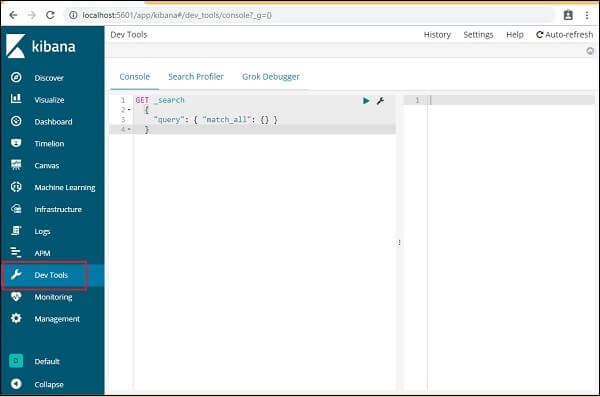
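The PHP code itself is only shown in the screenshot. For readers who prefer Python, here is a rough equivalent, not the original code: it reads a JSON array of todo objects and writes an action line plus a document line for each one. It assumes every object has an `id` field (the todos at the URL above do) and uses it as the document `_id`.

```python
import json


def todos_to_bulk(json_text: str, index: str = "todo") -> str:
    """Convert a JSON array of objects into an Elasticsearch bulk body."""
    todos = json.loads(json_text)
    if not todos:
        return ""  # nothing to upload
    lines = []
    for todo in todos:
        # Action line: which index and _id the next line goes to.
        lines.append(json.dumps({"index": {"_index": index, "_id": todo["id"]}}))
        # Source line: the record itself, serialized on a single line.
        lines.append(json.dumps(todo))
    return "\n".join(lines) + "\n"  # a bulk body must end with a newline
```

To use it on the real data, the text from https://jsonplaceholder.typicode.com/todos can be downloaded first (for example with `urllib.request.urlopen`) and passed to `todos_to_bulk`; the result is what gets pasted into the console.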

Open the Dev Tools tab and paste the JSON data produced by the PHP code into its console, as shown below:

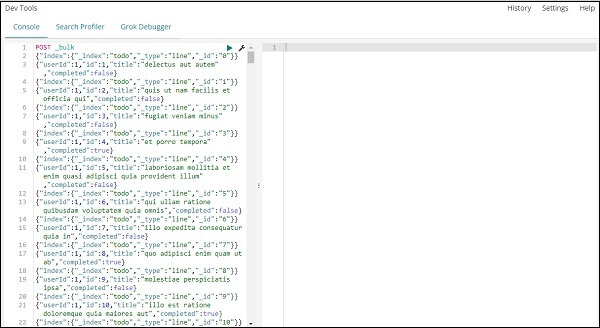

The JSON data is uploaded from Dev Tools with a bulk request. Note that the index name we are building here is todo.

Click the green button to run the request; once it runs, the data is uploaded.
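The green button simply sends the console's request to Elasticsearch over HTTP. As a sketch of what that request looks like, the Python helper below builds (but does not send) a `POST /_bulk` request with the `application/x-ndjson` content type that the bulk API uses. The host `http://localhost:9200` is an assumption (Elasticsearch's default port); a secured cluster would also need HTTPS and credentials.

```python
import urllib.request


def build_bulk_request(body: str,
                       host: str = "http://localhost:9200") -> urllib.request.Request:
    """Build the HTTP request that corresponds to a "POST _bulk" command in
    the Dev Tools console. Nothing is sent until urlopen() is called."""
    return urllib.request.Request(
        url=f"{host}/_bulk",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/x-ndjson"},
        method="POST",
    )


req = build_bulk_request('{"index": {"_index": "todo", "_id": 1}}\n{"id": 1}\n')
print(req.get_method(), req.full_url)  # POST http://localhost:9200/_bulk
```

Against a running cluster, `urllib.request.urlopen(req)` would send it; using Dev Tools avoids handling the connection details yourself.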

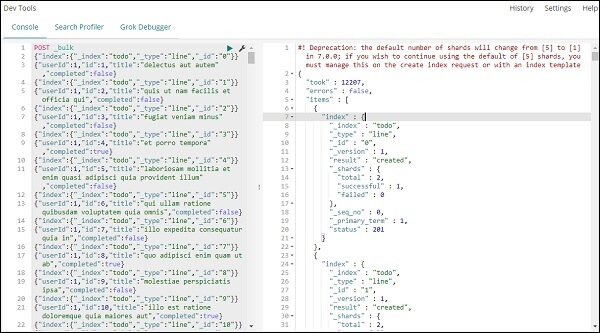

If you want, you can also check whether the index has been created in Elasticsearch.

The screenshot below shows how to verify it.

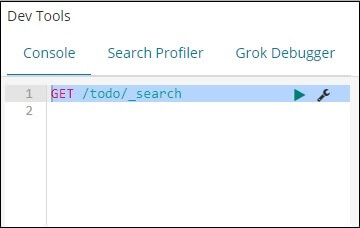

We can also check this from Dev Tools, and we can search for anything in the todo index with the commands given below.

Note: The following commands must be written in the Dev Tools console.

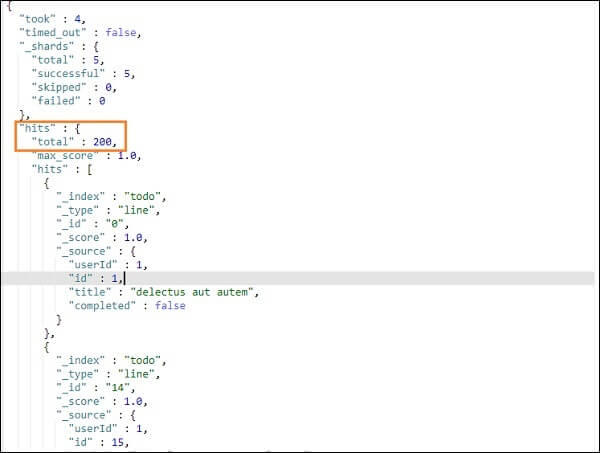

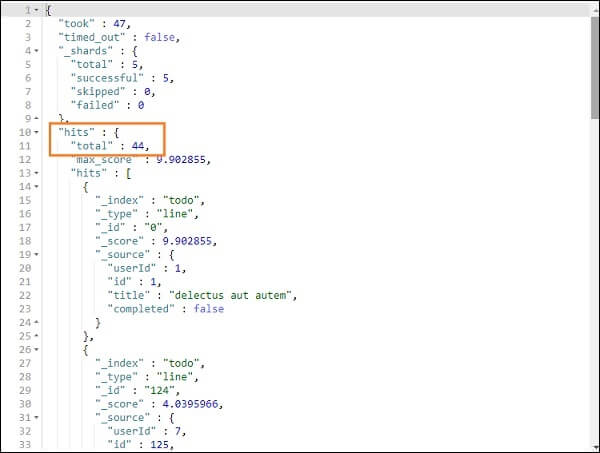

The output of the command is shown in the following screenshot.

The search runs over all the records present in the todo index, and the response reports a total of 200 records. By default, only the first 10 hits are listed in the response.
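Because only 10 hits are returned by default, a `size` parameter is needed to list all 200 records. The Python sketch below builds such a `match_all` body for `GET todo/_search` and reads the total from a response. It handles both ways Elasticsearch reports the total: an object like `{"value": 200, "relation": "eq"}` in version 7 and later, and a plain number in older versions.

```python
import json


def match_all_body(size: int = 200) -> str:
    """Request body for "GET todo/_search" returning up to `size` hits
    instead of the default 10."""
    return json.dumps({"query": {"match_all": {}}, "size": size})


def total_hits(response: dict) -> int:
    """Read the total hit count from a parsed search response."""
    total = response["hits"]["total"]
    return total["value"] if isinstance(total, dict) else total


print(match_all_body())  # {"query": {"match_all": {}}, "size": 200}
```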

Record searching in the todo index

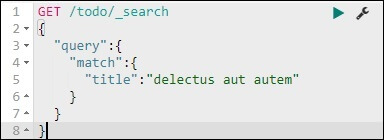

To search for a particular record in the index, we can run a query against one of its fields.

We can also fetch only the records whose title matches the text we give.
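A title search like this is usually written as a `match` query on the `title` field (each todo object at the URL above has `userId`, `id`, `title`, and `completed`). The Python sketch below builds that body and the console text to paste into Dev Tools; the `phrase` switch, which uses `match_phrase` to require the words in order, is an optional addition for illustration, and `"example title"` is a placeholder search text.

```python
import json


def title_query(text: str, phrase: bool = False, size: int = 10) -> str:
    """Search body matching the `title` field. A `match` query hits titles
    sharing any analyzed word with `text`; `match_phrase` needs the words in order."""
    query_type = "match_phrase" if phrase else "match"
    return json.dumps({"query": {query_type: {"title": text}}, "size": size})


def console_command(index: str, body: str) -> str:
    """Text for the Dev Tools console: the request line, then the JSON body."""
    return f"GET {index}/_search\n{body}"


print(console_command("todo", title_query("example title")))
```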