PySpark Profiler

PySpark supports custom profilers. They let you use profilers other than the default BasicProfiler and write profiles in output formats other than the ones BasicProfiler provides. A profiler collects performance statistics for the Python code that runs on the workers, such as call counts and time spent per function, grouped by RDD. This makes it a useful review tool for finding where a job spends its time.

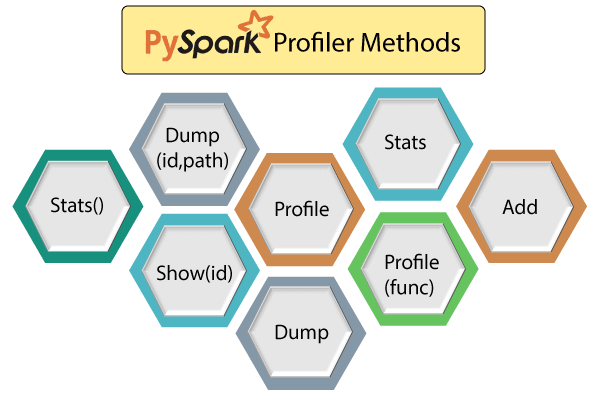

A custom profiler has to define or inherit the following methods:

- Add

The add method adds a profile to the existing accumulated profile. The profiler class is chosen when the SparkContext is created, through its profiler_cls argument.

Output:

[0, 4, 7, 9, 8, 15, 20, 18, 21, 25]
My custom profiles for RDD:1
My custom profiles for RDD:3
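To make "adding a profile to the accumulated profile" concrete, here is a minimal sketch that uses only Python's standard cProfile and pstats modules, not PySpark. It assumes, as a simplification, that a profile is a pstats.Stats object and that None stands for the empty accumulated profile. The helper names profile_once and add_in_place are hypothetical.

```python
import cProfile
import pstats


def work_a():
    return sum(i * i for i in range(10_000))


def work_b():
    return sorted(range(10_000), reverse=True)[:5]


def profile_once(func):
    """Run func once under cProfile and return the result as pstats.Stats."""
    profiler = cProfile.Profile()
    profiler.runcall(func)
    return pstats.Stats(profiler)


def add_in_place(accumulated, new):
    """Merge `new` into `accumulated`; None stands for the empty profile."""
    if accumulated is None:
        return new
    accumulated.add(new)  # sums call counts and timings per function
    return accumulated


# Pretend each call below happened in a separate task, then merge the profiles.
total = None
for func in (work_a, work_b, work_a):
    total = add_in_place(total, profile_once(func))

# Keys of total.stats are (filename, line, function name); each value starts
# with (primitive calls, total calls, ...).
for (_, _, name), (_, ncalls, *_rest) in total.stats.items():
    if name.startswith("work_"):
        print(name, ncalls)
```

After merging, work_a is reported with two calls and work_b with one, because pstats.Stats.add sums the entries for the same function.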

- Profile

It creates a system profile of some sort.

- Stats

This method returns the collected stats.

- Dump

It dumps the profiles to a path.

The Profiler base class provides these methods with the following signatures:

- dump(id, path)

This method dumps the profile into path, where id is the RDD id.
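A dump(id, path) implementation could look like the following standard-library sketch. It assumes the collected stats are a pstats.Stats object and writes one file per RDD id. The helper name dump_profile and the rdd_<id>.pstats file name are choices made for this illustration.

```python
import cProfile
import os
import pstats
import tempfile


def busy():
    return [str(i) for i in range(20_000)]


def dump_profile(stats, rdd_id, path):
    """Write `stats` to <path>/rdd_<rdd_id>.pstats, creating `path` if needed.

    Returns the file path, or None when there is nothing to dump.
    """
    if stats is None:
        return None
    os.makedirs(path, exist_ok=True)
    target = os.path.join(path, "rdd_%d.pstats" % rdd_id)
    stats.dump_stats(target)  # binary format readable by pstats.Stats
    return target


profiler = cProfile.Profile()
profiler.runcall(busy)
stats = pstats.Stats(profiler)

out_dir = os.path.join(tempfile.mkdtemp(), "profiles")
written = dump_profile(stats, 3, out_dir)

# The dumped file can be reloaded later for offline analysis.
reloaded = pstats.Stats(written)
print(os.path.basename(written))  # prints rdd_3.pstats
```

Writing the standard pstats binary format means the dump can be inspected later with any pstats-compatible tool, independently of the job that produced it.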

- profile(func)

It runs the function func under the profiler and records its profile.

- show(id)

This method prints the profile stats to stdout, where id is the RDD id.
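The following sketch shows one way a show(id) method could print collected stats, again using only cProfile and pstats. The header format and the helper name show_profile are assumptions for illustration; the optional out argument exists only so that the report can also be captured in a string.

```python
import cProfile
import io
import pstats
import sys


def slow_square(n):
    return sum(i * i for i in range(n))


def show_profile(stats, rdd_id, out=None):
    """Print a header and the collected stats for one RDD id (stdout by default)."""
    if stats is None:
        return
    if out is None:
        out = sys.stdout
    stats.stream = out  # pstats writes its report to this stream
    print("=" * 60, file=out)
    print("Profile of RDD<id=%d>" % rdd_id, file=out)
    print("=" * 60, file=out)
    # Sort by internal time, then by cumulative time, and print every entry.
    stats.sort_stats("time", "cumulative").print_stats()


profiler = cProfile.Profile()
profiler.runcall(slow_square, 50_000)
stats = pstats.Stats(profiler)

show_profile(stats, 1)  # report goes to stdout

buffer = io.StringIO()  # or capture the report, e.g. for logging
show_profile(stats, 1, out=buffer)
report = buffer.getvalue()
```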

- stats()

The stats() method returns the collected profiling stats.

class pyspark.BasicProfiler(ctx)

This is the default profiler. It is implemented on top of cProfile and an Accumulator.
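To tie profile(func) and stats() together, here is a rough, standalone imitation of a BasicProfiler-style class. It is not PySpark's implementation: the class name SketchProfiler is hypothetical, and a plain attribute replaces the Accumulator that, in PySpark, merges the results collected on the workers.

```python
import cProfile
import pstats


class SketchProfiler:
    """Hypothetical stand-in for a BasicProfiler-style profiler."""

    def __init__(self):
        self._accumulated = None  # empty profile: nothing recorded yet

    def profile(self, func):
        """Run func under cProfile and add its stats to the accumulated profile."""
        profiler = cProfile.Profile()
        profiler.runcall(func)
        st = pstats.Stats(profiler)
        st.strip_dirs()  # keep only file base names in the report
        if self._accumulated is None:
            self._accumulated = st
        else:
            self._accumulated.add(st)

    def stats(self):
        """Return the collected pstats.Stats, or None if nothing was profiled."""
        return self._accumulated


def partition_work():
    return max(range(30_000))


prof = SketchProfiler()
for _ in range(3):  # e.g. one call per partition
    prof.profile(partition_work)

collected = prof.stats()
collected.sort_stats("cumulative").print_stats(5)  # top 5 entries
```

In PySpark itself, a custom profiler is normally a subclass of pyspark.BasicProfiler (or pyspark.Profiler). Python profiling is switched on with the spark.python.profile configuration, the class is passed as SparkContext(..., profiler_cls=MyProfiler), where MyProfiler stands for your own class, and sc.show_profiles() or sc.dump_profiles(path) then calls show(id) or dump(id, path) for each profiled RDD.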