Time Series in RNN

In this tutorial, we will use an RNN with time-series data. A time series depends on its own past, which means previous values carry significant information that the network can learn from. Time series prediction estimates the future values of a series such as a stock price, a temperature, or GDP.

Data preparation for an RNN on time series is a little tricky. The objective is to predict the next value of the series, so we use past information to estimate the value at t+1. The label is the input sequence shifted one period ahead.

Secondly, the number of inputs is set to 1, i.e., one observation per time step. Finally, the time step is the length of the input sequence. If we set the time step to 10, each input sequence contains ten consecutive observations.

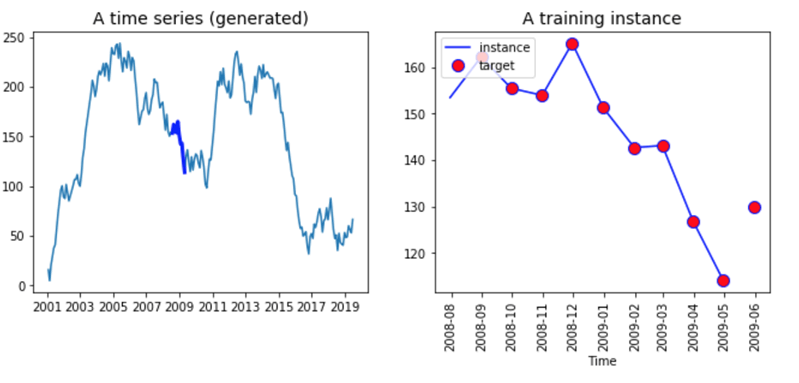

In the graph, the time series is shown on the left and a fictive input sequence on the right. We create a function that returns a dataset with a random value for each month from January 2001 to December 2016.
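As a rough sketch of what such a function might look like, the block below builds a monthly random walk with NumPy only. The name `make_random_walk`, the `step` and `seed` parameters, and the uniform range of the monthly shocks are all assumptions made for illustration. Because the values are random, the numbers it prints will not match any particular run.

```python
import numpy as np

def make_random_walk(start="2001", n=192, step=18.0, seed=0):
    """Return (month_ends, values) for a monthly random-walk series.

    Hypothetical helper: the name, `step`, and `seed` are assumptions.
    """
    rng = np.random.default_rng(seed)
    # n consecutive months beginning at the first month of `start`.
    months = np.datetime64(start, "M") + np.arange(n).astype("timedelta64[M]")
    # Month-end date: first day of the following month minus one day.
    next_month = (months + np.timedelta64(1, "M")).astype("datetime64[D]")
    month_ends = next_month - np.timedelta64(1, "D")
    # The cumulative sum of uniform shocks gives a wandering series.
    values = rng.uniform(-step, step, size=n).cumsum()
    return month_ends, values

dates, values = make_random_walk(start="2001", n=192)
# Show the last five observations, one (date, value) pair per line.
for d, v in zip(dates[-5:], values[-5:]):
    print(d, round(float(v), 6))
```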

Output:

    2016-08-31     -93.459631
    2016-09-30     -95.264791
    2016-10-31     -95.551935
    2016-11-30    -105.879611
    2016-12-31    -123.729319
    Freq: M, dtype: float64

    ts = create_ts(start = '2001', n = 222)

The left part of the graph shows the whole series, which starts in 2001 and finishes in 2019. It makes no sense to feed all the data into the network at once; instead, we create batches of data with a length equal to the time step. Each batch is the X variable. The Y variable is the same as X but shifted by one period (i.e., we want to forecast t+1).

Both vectors have the same length, as the right part of the graph above shows. The line represents the ten values of the input X, while the red dots are the ten values of the label y. Note that the label starts one period after X and ends one period after it.
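The relationship between X and y can be illustrated on a toy series. The integer values below are placeholders chosen so the shift is easy to see; they are not the tutorial's data.

```python
import numpy as np

series = np.arange(100, 112)    # toy series: 100, 101, ..., 111
time_step = 10

X = series[0:time_step]         # values at positions 0..9
y = series[1:time_step + 1]     # values at positions 1..10 (X shifted by one)

print(X)
print(y)
```

Every element of `y` is the value that follows the corresponding element of `X`, so both vectors have ten entries and overlap in nine of them.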

Build an RNN to analyze Time Series in TensorFlow

It is time to build our first RNN to predict the series. We have to specify some hyperparameters for the model (settings such as the number of neurons):

- Number of inputs: 1

- Time step (windows in time series): 10

- Number of neurons: 120

- Number of outputs: 1

Our network will learn from sequences of 10 observations and contain 120 recurrent neurons. We feed the model one input per time step.

Before constructing the model, we need to split the dataset into a train set and a test set. The full dataset has 222 data points; we will use the first 201 points to train the model and the last 21 points to test it.

After we define the train and test sets, we need to create an object containing the batches. These batches hold the X values and the Y values. Remember that the X values lag one period behind the labels. Therefore, we use the first 200 observations with a time step of 10. The X_batches object must contain 20 batches of size 10 × 1. The y_batches object has the same size as X_batches, but is shifted one period ahead.

Step 1) Create the train and test sets

Firstly, we convert the series into a NumPy array; then, we define the window (the number of time steps the network learns from), the number of inputs and outputs, and the size of the train set.

After that, we split the array into two datasets.
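A minimal sketch of this step, assuming the constants are named `n_windows`, `n_input`, `n_output`, and `size_train` (the first two names appear later in the text; the others are assumptions). The series is a random stand-in with 222 points, not the tutorial's actual data.

```python
import numpy as np

# Stand-in for the real series: 222 random-walk points (assumed data).
rng = np.random.default_rng(0)
series = rng.uniform(-18, 18, size=222).cumsum()

n_windows = 10      # time step: observations per input sequence
n_input = 1         # one observation per time step
n_output = 1        # one predicted value per time step
size_train = 201    # first 201 points are used for training

train = series[:size_train]   # observations 1..201
test = series[size_train:]    # observations 202..222
print(train.shape, test.shape)   # (201,) (21,)
```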

Step 2) Create the function that returns X_batches and y_batches

To make this easier, we can create a function that returns two arrays, one for X_batches and one for y_batches.

Let’s make a function to construct the batches.

Note that the X_batches are lagged by one period (we take the value at t-1). The output of the function has three dimensions: the first is the number of batches, the second is the size of the window, and the last is the number of inputs.

The tricky part of working with a time series is selecting the data points correctly. For the X data points, we choose the observations from t = 1 to t = 200, while for the Y data points, we take the observations from t = 2 to t = 201. Once we have the correct data points, reshaping the series is straightforward.

To construct the object with the batches, we need to split the dataset into 20 batches of the same length. We can use the reshape method and pass -1 as the first dimension so that the number of batches is inferred from the window size. The value 10 is the number of observations per batch, and 1 is the number of inputs.

We need to do the same step for the label.

Note that we need to shift the data by the number of periods we want to forecast. For instance, to predict one period ahead, we shift the series by 1; to forecast two periods ahead, we shift it by 2.
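The selection, shift, and reshape described above can be sketched as a single function. The signature below, including the `horizon` parameter for the size of the shift, is an assumption for illustration, and the training data is a simple counter so the shift is visible in the output.

```python
import numpy as np

def create_batches(data, windows, n_input, n_output, horizon=1):
    """Return (X_batches, y_batches), with y shifted `horizon` periods ahead.

    Hypothetical signature; `horizon` is an illustrative addition.
    """
    # Keep only as many points as fill whole windows once the shift is applied.
    usable = ((len(data) - horizon) // windows) * windows
    x_data = data[:usable]                      # t = 1 .. usable
    y_data = data[horizon:usable + horizon]     # same points, shifted ahead
    # -1 lets NumPy infer the number of batches from the window size.
    X_batches = x_data.reshape(-1, windows, n_input)
    y_batches = y_data.reshape(-1, windows, n_output)
    return X_batches, y_batches

train = np.arange(201, dtype=float)   # placeholder for the 201 training points
X_batches, y_batches = create_batches(train, windows=10, n_input=1, n_output=1)
print(X_batches.shape, y_batches.shape)   # (20, 10, 1) (20, 10, 1)
```

With 201 training points and a window of 10, 200 points are used for X and the following 200 for y, which gives the 20 batches of size 10 × 1 mentioned earlier.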

Now that the function is defined, we call it to create the batches.

We can print the shape to make sure the dimensions are correct.

We need to create the test set with only one batch of data and 20 observations.
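A sketch of the test batch, using a direct reshape with a window of 20 to match the single batch of 20 observations. The 21 test values are placeholders (an assumption). Note that a model built with a fixed window of 10 would instead need these points reshaped with `reshape(-1, 10, 1)`, giving two windows.

```python
import numpy as np

test = np.arange(201, 222, dtype=float)   # placeholder for the last 21 points

X_test = test[:20].reshape(-1, 20, 1)     # test observations 1..20
y_test = test[1:].reshape(-1, 20, 1)      # test observations 2..21 (shifted by one)
print(X_test.shape, y_test.shape)         # (1, 20, 1) (1, 20, 1)
```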

Note that we forecast one period at a time: the second predicted value is based on the actual value of the first period (t+1) of the test dataset, because the true value is known by then.

If we want to forecast t+2, we need to use the predicted value for t+1; to predict t+3, we need the predicted values for t+1 and t+2. This makes it difficult to predict “t+n” periods ahead precisely.
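Feeding predictions back in as inputs can be sketched as follows. The one-step predictor `last_difference_model` is a hypothetical stand-in for a trained network (it simply continues the last observed step), and `recursive_forecast` is an illustrative name, not part of any library.

```python
import numpy as np

def recursive_forecast(predict_next, history, steps):
    """Forecast `steps` periods ahead by feeding predictions back in."""
    window = list(history)
    forecasts = []
    for _ in range(steps):
        next_value = predict_next(np.asarray(window))
        forecasts.append(next_value)
        # Slide the window: drop the oldest value, append the prediction.
        window = window[1:] + [next_value]
    return np.array(forecasts)

def last_difference_model(window):
    # Hypothetical stand-in for a trained model: repeat the last increment.
    return window[-1] + (window[-1] - window[-2])

history = np.array([1.0, 2.0, 3.0, 4.0])
forecast = recursive_forecast(last_difference_model, history, steps=3)
print(forecast)   # [5. 6. 7.]
```

Because each forecast beyond t+1 is built on earlier forecasts rather than observed values, any error in an early step carries into the later ones.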

Our batches are ready, so we can build the RNN architecture. Remember, we have 120 recurrent neurons.

Step 3) Build the model

To create the model, we need to define three parts:

- The variable with the tensors

- The RNN

- The loss and optimization

1. Variables

We need to specify the X and y variables with an appropriate shape. This step is trivial. The tensors have the same dimensions as the X_batches and y_batches objects.

For instance, the tensor X is a placeholder with three dimensions:

- None: size of the batch

- n_windows: Length of the window

- n_input: Number of inputs

The result is:

2. Create the RNN

In the second part, we need to define the architecture of the network. As before, we use the BasicRNNCell object and the dynamic_rnn function from TensorFlow.

The next part is trickier but allows faster computation. We need to pass the RNN output through a dense layer and then reshape the result so that it has the same dimensions as the input.
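To make the shapes concrete, here is a NumPy re-implementation of the forward pass: a basic recurrent cell unrolled over the window, followed by the flatten, dense, and reshape steps. This is a sketch of the computation, not the TensorFlow API; the weight names, the random initialisation, and the choice of tanh as the activation are assumptions.

```python
import numpy as np

n_windows, n_input, n_neurons, n_output = 10, 1, 120, 1
rng = np.random.default_rng(0)

# Randomly initialised weights (training would adjust these).
W_x = rng.normal(scale=0.1, size=(n_input, n_neurons))
W_h = rng.normal(scale=0.1, size=(n_neurons, n_neurons))
b_h = np.zeros(n_neurons)
W_out = rng.normal(scale=0.1, size=(n_neurons, n_output))
b_out = np.zeros(n_output)

def rnn_forward(X):
    """X has shape (batch, n_windows, n_input)."""
    h = np.zeros((X.shape[0], n_neurons))        # initial hidden state
    states = []
    for t in range(X.shape[1]):
        # Basic recurrent cell: mix the current input with the previous state.
        h = np.tanh(X[:, t, :] @ W_x + h @ W_h + b_h)
        states.append(h)
    rnn_output = np.stack(states, axis=1)        # (batch, n_windows, n_neurons)
    # Flatten time into the batch axis so one matrix product handles every step.
    stacked = rnn_output.reshape(-1, n_neurons)  # (batch * n_windows, n_neurons)
    projected = stacked @ W_out + b_out          # (batch * n_windows, n_output)
    # Restore the time axis: one prediction per time step.
    return projected.reshape(-1, X.shape[1], n_output)

X = rng.normal(size=(20, n_windows, n_input))
outputs = rnn_forward(X)
print(outputs.shape)   # (20, 10, 1)
```

Flattening before the dense layer is what makes the projection a single matrix multiplication instead of one per time step.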

3. Create the loss and optimization

The model optimization depends on the task we are performing. Here we predict a continuous variable rather than a class, and this difference is important because it changes the optimization problem: for a continuous variable, the objective is to minimize the mean squared error. To construct this metric in TF, we can use:
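Independently of the framework, the mean squared error is the average of the squared differences between predictions and labels. A minimal NumPy sketch, with a hypothetical function name and made-up values:

```python
import numpy as np

def mean_squared_error(y_true, y_pred):
    # Average of the squared differences between prediction and label.
    return np.mean(np.square(y_pred - y_true))

y_true = np.array([1.0, 2.0, 3.0])
y_pred = np.array([1.0, 2.5, 2.0])
mse = mean_squared_error(y_true, y_pred)
print(mse)
```

Here the errors are 0, 0.5, and -1, so the squared errors sum to 1.25 and the mean is 1.25 / 3.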

The remaining code is the same as before; we use an Adam optimizer to reduce the loss.

We can pack everything together, and our model is ready to train.

We will train the model using 1500 epochs and print the loss every 150 iterations. Once the model is trained, we evaluate the model on the test set and create an object containing the prediction.
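The shape of such a training loop can be sketched in NumPy. To keep the example short, the model here is a toy linear regression rather than the RNN, so only the loop structure, the Adam update rule, and the periodic logging carry over. The data, learning rate, and variable names are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy data: a noisy straight line (stand-in for the real task).
x = np.linspace(0.0, 1.0, 200)
y = 0.8 * x + 0.1 + rng.normal(scale=0.01, size=x.shape)

params = np.zeros(2)                  # [slope, intercept]
m = np.zeros_like(params)             # Adam first-moment estimate
v = np.zeros_like(params)             # Adam second-moment estimate
lr, beta1, beta2, eps = 0.01, 0.9, 0.999, 1e-8

def loss_and_grad(p):
    err = p[0] * x + p[1] - y
    loss = np.mean(err ** 2)          # mean squared error
    grad = np.array([np.mean(2 * err * x), np.mean(2 * err)])
    return loss, grad

initial_loss, _ = loss_and_grad(params)
logged = []
for step in range(1, 1501):
    loss, grad = loss_and_grad(params)
    # Adam: moving averages of the gradient and squared gradient, bias-corrected.
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad ** 2
    m_hat = m / (1 - beta1 ** step)
    v_hat = v / (1 - beta2 ** step)
    params -= lr * m_hat / (np.sqrt(v_hat) + eps)
    if step % 150 == 0:               # log every 150 iterations
        logged.append(loss)
        print(step, "\tMSE:", loss)
```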

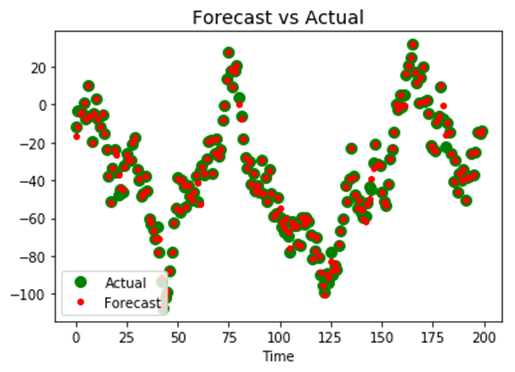

At last, we can plot the actual values of the series against the predicted values. If our model is accurate, the predicted values should lie on top of the actual values.

As we can see, the model has room for improvement. It is up to us to change hyperparameters such as the window, the batch size, or the number of recurrent neurons.

A recurrent neural network is an architecture suited to time series and text analysis. The output of the previous state is fed back in to preserve the memory of the network over time or over a sequence of words.

In TensorFlow, we can use the code below to train a recurrent neural network for time series:

Parameters of the model

Define the model

Constructing the optimization function

Training the model