
Spark Filter Function

In Spark, the filter function is a transformation that returns a new dataset containing only those elements of the source for which the supplied function (the predicate) returns true. In other words, it retrieves only the elements that satisfy the given condition.
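The following is a minimal sketch of these semantics, assuming Spark's Scala RDD API with spark-core on the classpath and running in local mode. The object name FilterSemantics and the sample range 1 to 10 are hypothetical. It shows that filter takes a predicate of type T => Boolean and produces a new RDD, leaving the source RDD unchanged.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object FilterSemantics {
  def evens(): Array[Int] = {
    // Local-mode context (assumes spark-core is on the classpath; no cluster needed).
    val sc = new SparkContext(
      new SparkConf().setAppName("FilterSemantics").setMaster("local[*]"))
    try {
      val numbers = sc.parallelize(1 to 10)
      // filter takes a predicate Int => Boolean and returns a new RDD[Int];
      // the source RDD `numbers` itself is not modified.
      val evenNumbers = numbers.filter(n => n % 2 == 0)
      // collect is an action: it runs the job and returns the kept elements.
      evenNumbers.collect()
    } finally sc.stop()
  }

  def main(args: Array[String]): Unit =
    println(evens().mkString(" ")) // prints 2 4 6 8 10
}
```

Only the elements for which the predicate returns true survive; collect returns them in their original partition order.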

Example of Filter function

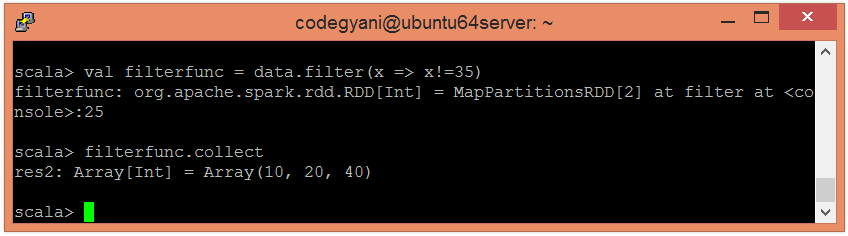

In this example, we filter the given data and retrieve all the values except 35.

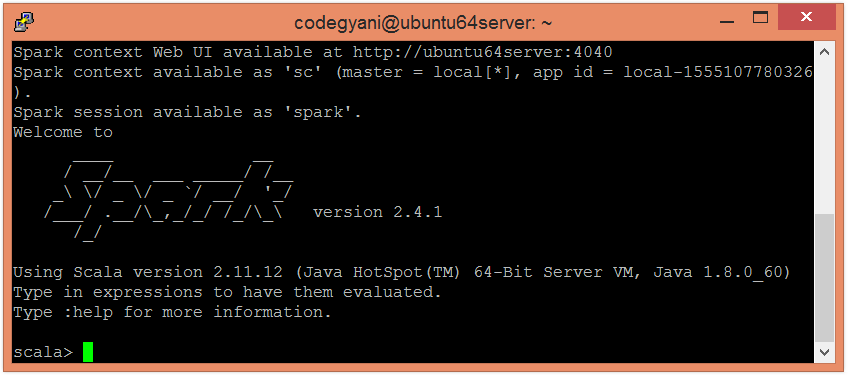

- To open Spark in Scala mode (spark-shell), run the command below.

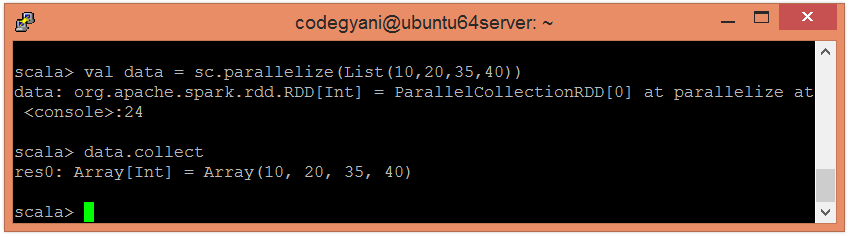

- Create an RDD from a parallelized collection.

- Read the generated result using the following command.

- Apply the filter function, passing the condition that each element must satisfy to be kept.

- Read the filtered result using the following command.

Here, we get the desired output: all the values except 35.
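The steps above can be sketched as a standalone Scala program rather than an interactive spark-shell session. This is a minimal sketch, assuming spark-core on the classpath and local mode; the object name FilterExample, the helper keepAllExcept, and the sample values 10, 20, 35, 40 are hypothetical, since the original input data is not shown in the text.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

object FilterExample {
  // Hypothetical helper: keeps every element that is not equal to `excluded`.
  def keepAllExcept(rdd: RDD[Int], excluded: Int): RDD[Int] =
    rdd.filter(x => x != excluded)

  def run(): Array[Int] = {
    // Local mode with 2 threads (assumes spark-core is on the classpath).
    val conf = new SparkConf().setAppName("FilterExample").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      // Step: create an RDD from a parallelized collection (hypothetical sample data).
      val data = sc.parallelize(List(10, 20, 35, 40))
      // Step: read the generated result.
      println(data.collect().mkString(", ")) // prints 10, 20, 35, 40
      // Step: apply filter with the condition "value is not 35".
      val filtered = keepAllExcept(data, 35)
      // Step: read the filtered result.
      filtered.collect()
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit =
    println(run().mkString(", ")) // prints 10, 20, 40
}
```

In spark-shell the SparkContext is already available as sc, so only the parallelize, filter, and collect calls would need to be typed.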
